ByteDance Seed Agent Model UI-TARS-1.5 Open Source: Achieving SOTA Performance in Various Benchmarks

Date

2025-04-17

Category

Technology Launch

Today, we are pleased to announce the release and open-source availability of UI-TARS-1.5, a multimodal agent built on a vision-language model that can efficiently carry out diverse tasks in virtual environments.

UI-TARS-1.5 has achieved state-of-the-art (SOTA) performance across seven conventional GUI benchmarks, and for the first time, it has demonstrated its long-term reasoning capacity in gaming scenarios and its interactive abilities in open environments.

GitHub: https://github.com/bytedance/UI-TARS

Website: https://seed-tars.com/

UI-TARS-1.5, which is based on our initial intelligent agent solution UI-TARS, has been significantly improved through reinforcement learning techniques to enhance its higher-order reasoning capabilities. This allows the model to "think" before taking any "action".

We have also seen a substantial improvement in the model's ability to generalize to unseen environments and tasks, allowing UI-TARS-1.5 to outperform previous leading models on several key benchmarks.

UI-TARS-1.5's performance across multiple benchmarks

We put forth a new vision for version 1.5: leveraging gaming as a medium to enhance the foundation model's reasoning abilities. Unlike fields such as mathematics and programming, gaming tasks require intuitive, commonsense reasoning and less reliance on specialized knowledge, positioning games as an ideal testing ground to gauge and enhance the generalization capabilities of future models.

UI-TARS-1.5 performs interactive gaming tasks through the "think-then-act" strategy, simulating human activity

1. Integration of reinforcement learning to achieve SOTA performance in multiple benchmarks

UI-TARS is a native GUI agent capable of performing real operations on computer and mobile systems, controlling browsers, and completing complex interactive tasks.

Here are a few use cases of UI-TARS-1.5:

- Case 1: Assist users in collecting email information and filling out forms

- Case 2: Recognize content from images and rename files accordingly

- Case 3: Download images from browsers and compress them as specified

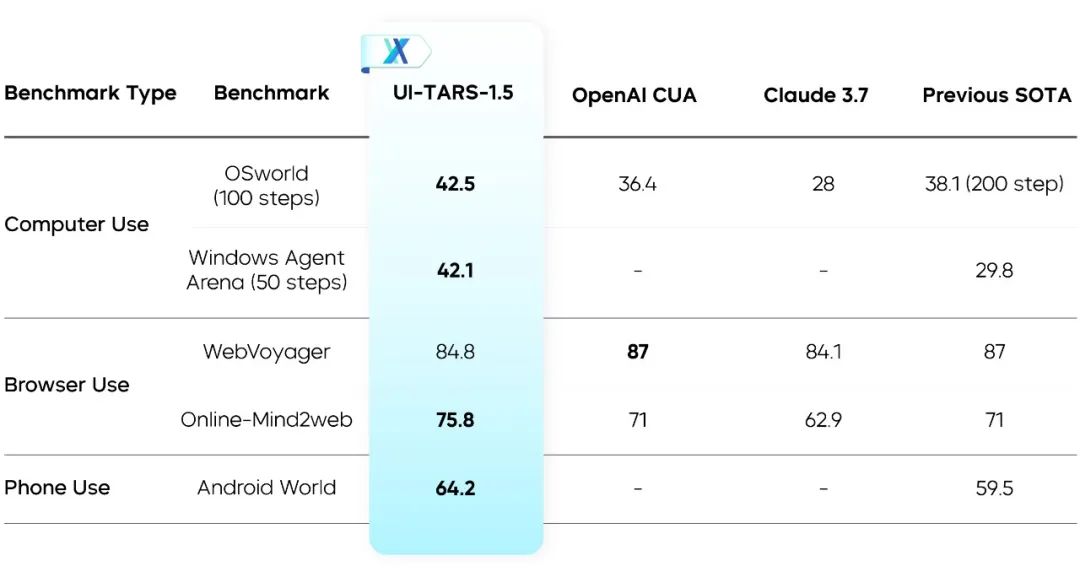

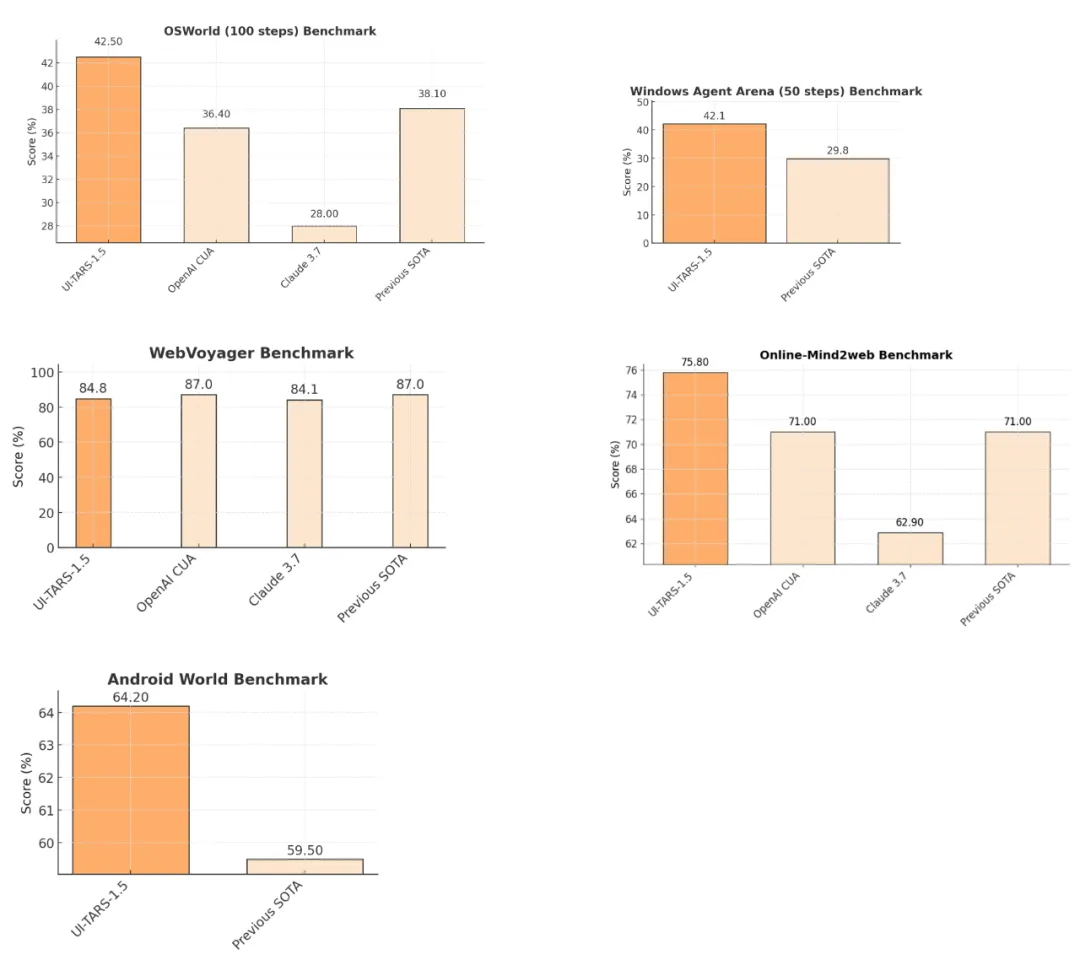

On seven representative GUI benchmarks, UI-TARS-1.5 showed exceptional performance:

Evaluation of UI-TARS-1.5 GUI usability

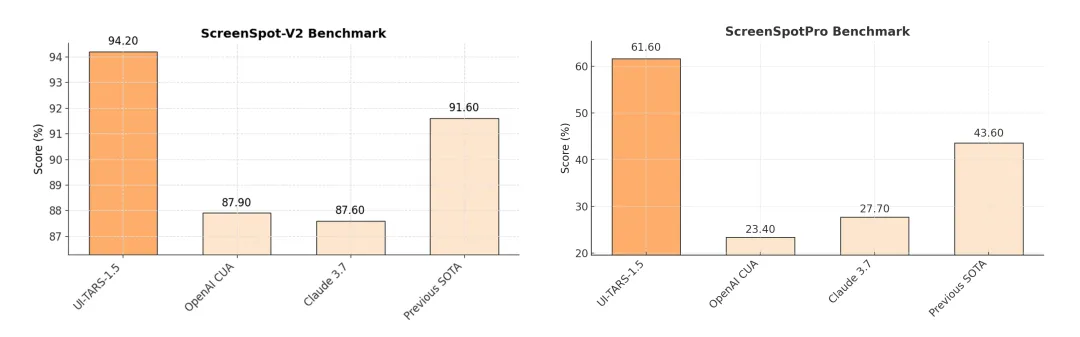

Version 1.5 shows marked progress in GUI grounding, the ability to locate the on-screen element an instruction refers to, which is pivotal for UI-TARS-1.5 to execute complex tasks effectively. In particular, UI-TARS-1.5 achieved an accuracy of 61.6% on the challenging ScreenSpot-Pro benchmark, surpassing Claude (27.7%), CUA (23.4%), and the prior best models (43.6%).
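To make the grounding metric concrete, here is a minimal sketch of how such an accuracy figure is typically computed. It assumes the common convention that a prediction counts as correct when the predicted click point falls inside the target element's bounding box; the function names and data layout are illustrative and are not taken from the UI-TARS evaluation code.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]              # (x, y) of the predicted click
Box = Tuple[float, float, float, float]  # (left, top, right, bottom) of the target


def point_in_box(point: Point, box: Box) -> bool:
    """Return True when the click lands inside the box (edges included)."""
    x, y = point
    left, top, right, bottom = box
    return left <= x <= right and top <= y <= bottom


def grounding_accuracy(predictions: Sequence[Point], targets: Sequence[Box]) -> float:
    """Percentage of predictions that hit their target element (assumed metric)."""
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    if not predictions:
        return 0.0
    hits = sum(point_in_box(p, b) for p, b in zip(predictions, targets))
    return 100.0 * hits / len(predictions)
```

For example, one hit and one miss against the same target box gives an accuracy of 50.0.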

Evaluation of UI-TARS-1.5 GUI Grounding capability

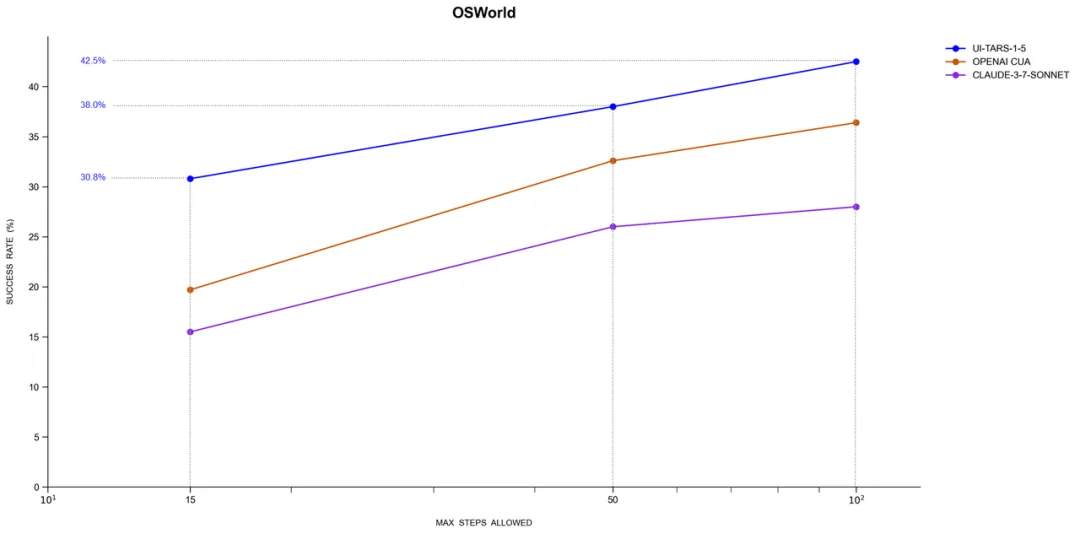

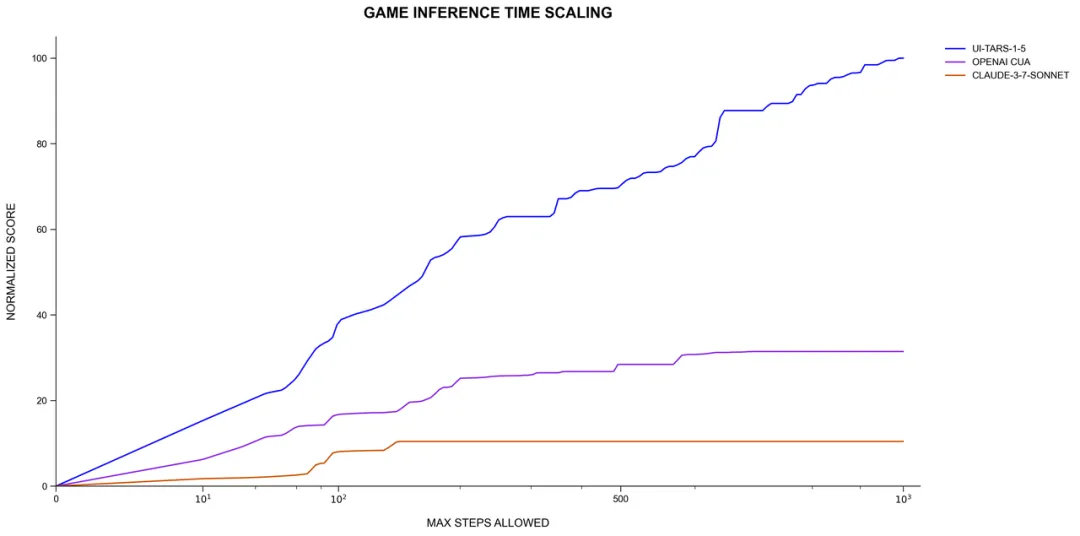

Moreover, UI-TARS-1.5 exhibits inference-time scaling: its performance keeps improving as the number of interaction rounds allowed during inference increases. It also shows impressive stability and generalization, particularly in long-horizon tasks.

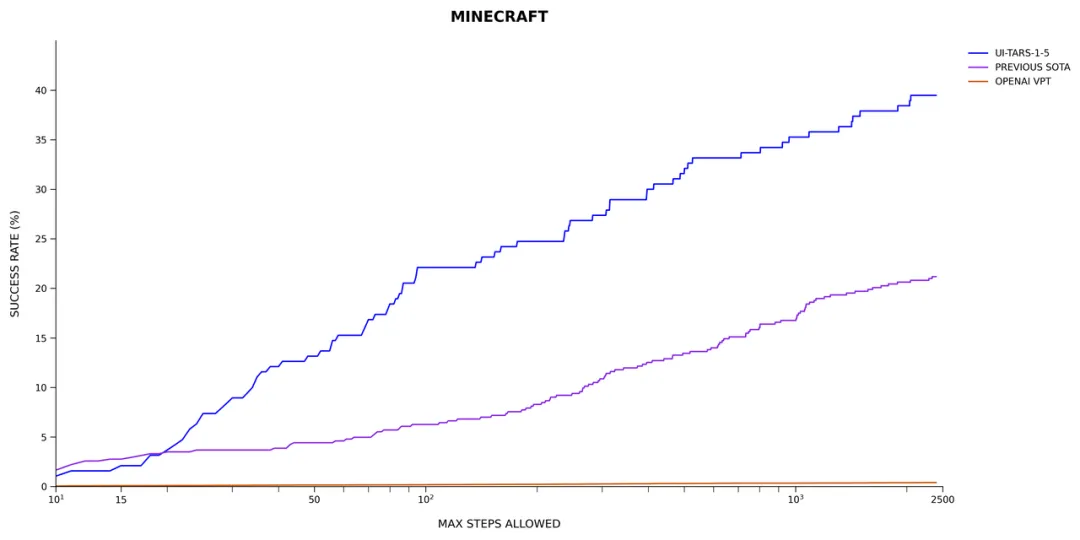

Inference-time scaling performance (the horizontal axis shows the model's maximum number of steps; the vertical axis shows model performance)

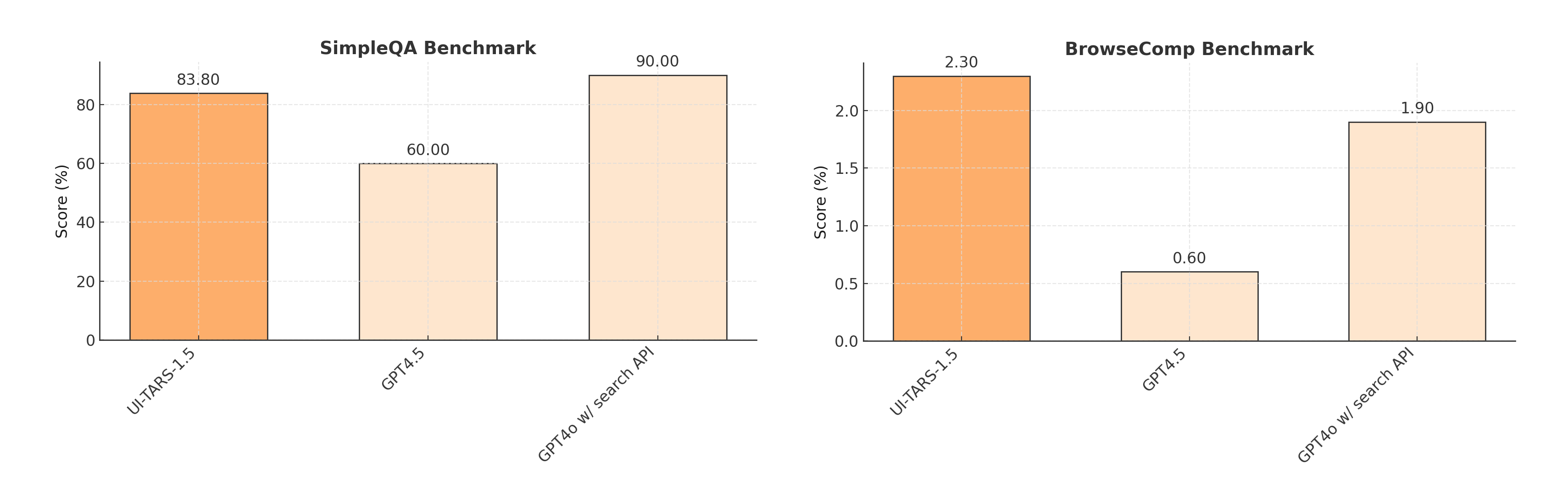

Although UI-TARS-1.5 is not specifically designed for deep research tasks like information retrieval, it still demonstrates strong generalization ability in two challenging web browsing scenarios, highlighting its potential as a versatile AI interface agent.

Performance of UI-TARS-1.5 in web browsing tasks

2. Long-term reasoning in gaming and open-ended interaction mechanics in Minecraft

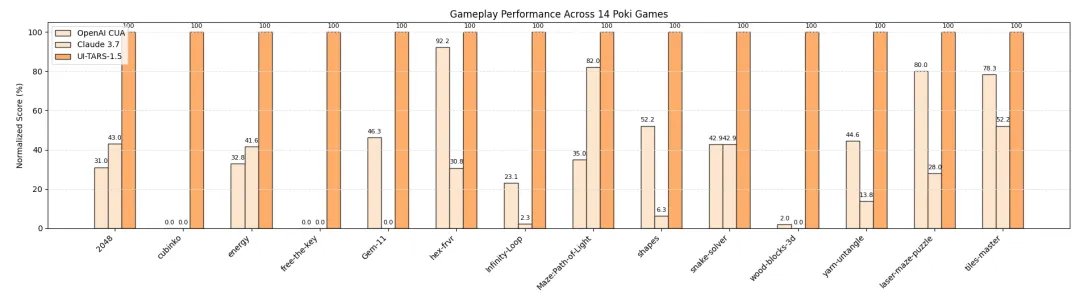

Game tasks are a "touchstone" for the reasoning and strategy-planning abilities of multimodal agents. We selected 14 mini-games of different styles from poki.com for testing, allowing the model up to 1000 interactive steps per game.

Case 1: Maze_Path-of-Light

Case 2: Energy

Case 3: Yarn-untangle

We normalized each game's score to a range of 0-100, using the highest score as the reference, and then averaged the normalized scores across games to produce an overall score.
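The normalization described above can be sketched as follows. This is an illustration under stated assumptions: it assumes linear scaling against the highest score recorded for each game and clamps the result to the 0-100 range; the exact formula used in the evaluation is not given in this article, and the function and game names are hypothetical.

```python
from typing import Dict


def normalize_scores(raw: Dict[str, float], reference: Dict[str, float]) -> Dict[str, float]:
    """Scale each raw game score to 0-100 relative to that game's reference (highest) score."""
    normalized = {}
    for game, score in raw.items():
        best = reference[game]
        if best <= 0:
            raise ValueError(f"reference score for {game!r} must be positive")
        # Assumed formula: linear scaling, clamped to the 0-100 range.
        normalized[game] = max(0.0, min(100.0, 100.0 * score / best))
    return normalized


def average_score(normalized: Dict[str, float]) -> float:
    """Overall score: the plain mean of the per-game normalized scores."""
    return sum(normalized.values()) / len(normalized)


# Hypothetical numbers, for illustration only.
scores = normalize_scores({"game_a": 5, "game_b": 30}, {"game_a": 10, "game_b": 30})
overall = average_score(scores)
```

With these made-up inputs, `game_a` normalizes to 50.0, `game_b` to 100.0, and the overall average is 75.0.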

Performance of UI-TARS-1.5 across multiple games

UI-TARS-1.5 not only excels in gaming tasks but also displays stable inference-time scalability.

Inference-time scaling of UI-TARS-1.5 in games

Additional evaluation of UI-TARS-1.5 took place within the open-world Minecraft game environment. Unlike static GUI evaluations, Minecraft requires the model to make real-time decisions based on visual inputs in dynamic three-dimensional spaces.

Case 1

Case 2

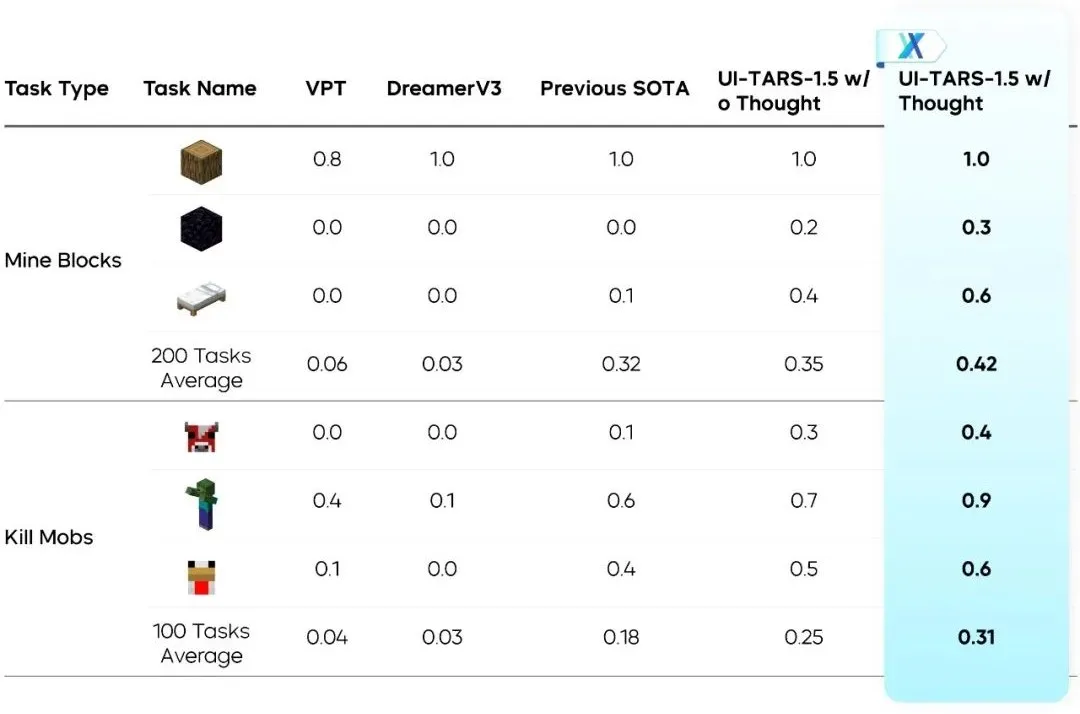

Using the MineRL (v1.16.5) standard evaluation tasks, we compared UI-TARS-1.5's performance with AI agents such as OpenAI VPT, DeepMind DreamerV3, and JARVIS-VLA in two tasks:

- Mine Blocks: Locate and destroy specified blocks

- Kill Mobs: Search for and defeat hostile creatures

All evaluations were conducted under the same conditions: visual input only (no state information), raw mouse and keyboard operations, and a first-person perspective.

Performance of UI-TARS-1.5 in MineRL

UI-TARS-1.5 obtains the highest success rates in both tasks, especially with its "thinking" mode activated, validating the efficacy of our proposed "think-then-act" strategy.

Likewise, UI-TARS-1.5 shows great scalability within Minecraft: increased interaction rounds correlate positively with task performance. The model can continuously optimize its strategies during interactions and leverage prior experience to guide future decisions.

Scalability of UI-TARS-1.5 within Minecraft

3. Behind UI-TARS-1.5: A native intelligent agent model capable of thinking before acting

UI-TARS-1.5 achieves precise GUI operations due to the team's technological advancements in four key areas:

- Enhanced visual perception: Using expansive datasets of interface screenshots, the model comprehends element semantics and context, forming accurate descriptions.

- System 2 reasoning mechanism: Generating "thought" sequences before each action, which supports intricate multi-step planning and decision-making.

- Unified action modeling: Creation of a standardized action space across platforms, enhancing controllability and execution precision through learning from actual trajectories.

- Self-evolving training paradigm: By automatically collecting interaction trajectories and applying reflective training, the model continuously improves from errors and adapts to evolving complex environments.

General large models often encounter obstacles such as "insufficient operational detail" and "unclear goal alignment" in GUI contexts. UI-TARS efficiently addresses these gaps through vision-action joint optimization.

For instance, general models may misunderstand requests like "enlarge the font" and perform incorrect operations, whereas UI-TARS can swiftly locate the "settings" entry and reason out the correct path based on existing knowledge to complete tasks precisely.

UI-TARS differs from industry-standard intelligent agents. In addition to performing tasks based on the coordination between vision capabilities and reinforcement learning, UI-TARS features a "thought" process to bridge reasoning and action, ensuring coherent behavioral logic and reliable responses.

In technological explorations, the UI-TARS team offers the following insights into intelligent agent evolution:

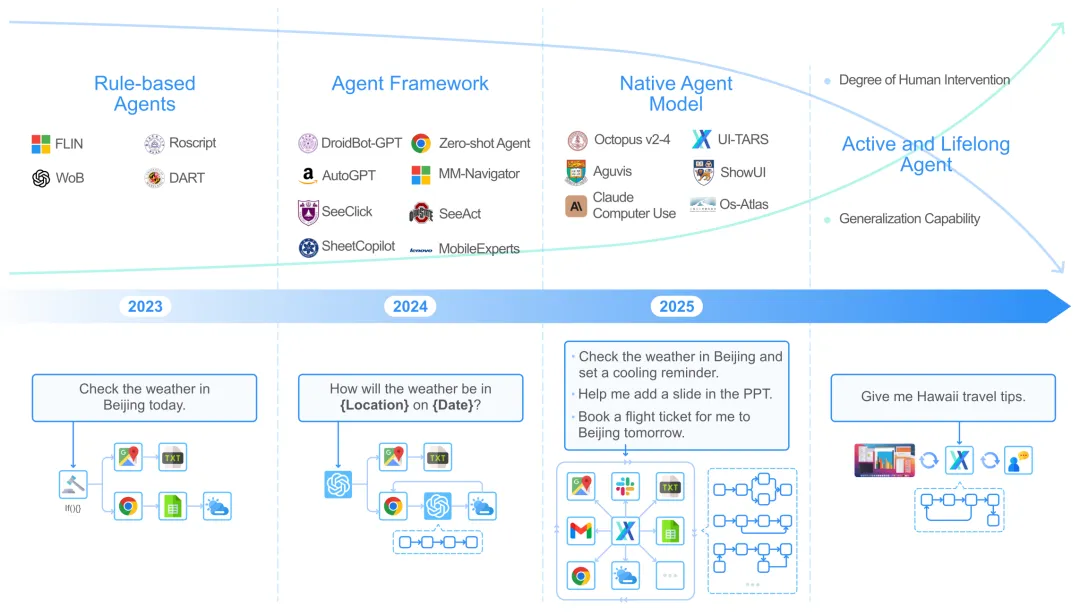

① From framework integration to unified model: The key to autonomous adaptation

The evolution of intelligent agents is shifting its focus from frameworks to integrated models.

Evolution of intelligent agents

In the early days, agent frameworks combined external components with large foundation models. This offered flexibility but suffered from weak adaptability, high coupling, and elevated maintenance costs.

UI-TARS belongs to the "agent model" category, featuring an integrated perception-reasoning-memory-action structure. It continuously accumulates knowledge during training, resulting in superior generalization and adaptability.

This "data-driven" closed-loop paradigm makes UI-TARS no longer reliant on manual rules and prompt engineering. It also eliminates the need to repeatedly set interaction steps, significantly lowering the development threshold.

② System 2: The key to unlocking deep cognitive power

Drawing inspiration from the psychological theories of "System 1 & 2," UI-TARS preserves System 1's fast, intuitive responses while integrating System 2's deeper cognitive functions for planning and reflection.

This is achieved via the reasoning trace before action execution, mimicking human thought processes for logical task completion.

This allows UI-TARS to go beyond merely executing instructions, truly comprehending and achieving the task goals.
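The "think-then-act" pattern can be sketched as a simple loop in which every step first produces a thought and only then an action, bounded by a maximum number of steps. Everything in this sketch is hypothetical: the `policy` and `env_step` callables, the `Step` record, and the toy counter environment stand in for the model and a real GUI, and do not reflect the actual UI-TARS interfaces.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Step:
    thought: str  # reasoning trace produced before acting
    action: str   # action chosen after the thought


Policy = Callable[[str, List[Step]], Tuple[str, str]]  # (observation, history) -> (thought, action)
EnvStep = Callable[[str], Tuple[str, bool]]            # action -> (next observation, done)


def run_episode(policy: Policy, env_step: EnvStep, first_observation: str, max_steps: int) -> List[Step]:
    """Alternate thought and action until the task is done or the step budget runs out."""
    history: List[Step] = []
    observation = first_observation
    for _ in range(max_steps):
        thought, action = policy(observation, history)  # think first ...
        history.append(Step(thought, action))
        observation, done = env_step(action)            # ... then act
        if done:
            break
    return history


def make_counter_env(target: int) -> EnvStep:
    """Toy environment: the task is complete after `target` clicks."""
    state = {"clicks": 0}

    def env_step(action: str) -> Tuple[str, bool]:
        if action == "click":
            state["clicks"] += 1
        return f"clicks={state['clicks']}", state["clicks"] >= target

    return env_step


def toy_policy(observation: str, history: List[Step]) -> Tuple[str, str]:
    """Toy policy: always reasons that another click is needed."""
    return f"I see {observation}; the counter is not full yet.", "click"
```

Calling `run_episode(toy_policy, make_counter_env(3), "clicks=0", max_steps=10)` finishes the toy task in three steps, while a budget of `max_steps=2` stops before completion. This is the same trade-off that a larger step budget addresses in inference-time scaling.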

③ Learning mechanism: The key to continuous iteration

UI-TARS's core architecture includes perception, action, memory, and reasoning modules. The perception module produces structured data describing UI element types, locations, visual features, and functional properties. The action module unifies control semantics across different platforms into a standardized action space. The memory module records historical interactions and uses them to improve behavior, while the reasoning module integrates task instructions, visual observations, and historical trajectories for reflection and replanning.
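As a rough illustration of what a standardized action space across platforms might look like, the sketch below defines one platform-independent action record and renders it into platform-specific commands. The action kinds, the normalized-coordinate convention, and the command strings (`mouse_click`, `tap`, `type_text`) are all assumptions made for this example, not the actual UI-TARS action schema.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Action:
    kind: str                    # hypothetical kinds: "click" or "type"
    x: Optional[float] = None    # horizontal position, normalized to 0..1
    y: Optional[float] = None    # vertical position, normalized to 0..1
    text: Optional[str] = None   # text payload for "type" actions


def to_pixels(action: Action, width: int, height: int) -> Tuple[int, int]:
    """Convert normalized coordinates to pixel coordinates for a given screen size."""
    if action.x is None or action.y is None:
        raise ValueError("action has no coordinates")
    return round(action.x * width), round(action.y * height)


def render(action: Action, platform: str, width: int, height: int) -> str:
    """Translate one unified action into a platform-specific command string."""
    if action.kind == "type":
        return f"type_text({action.text!r})"
    if action.kind == "click":
        px, py = to_pixels(action, width, height)
        verb = {"desktop": "mouse_click", "mobile": "tap"}[platform]
        return f"{verb}({px}, {py})"
    raise ValueError(f"unsupported action kind: {action.kind}")
```

The same `Action("click", 0.5, 0.5)` renders as `mouse_click(960, 540)` on a 1920x1080 desktop and as `tap(540, 1200)` on a 1080x2400 phone, so the policy only ever needs to emit one action vocabulary.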

Finally, the UI-TARS primary reasoning module produces "thought-action" sequences using multimodal models and continuously refines itself through feedback from real-world environments.

4. Conclusion

As the preview version of UI-TARS-2.0, UI-TARS-1.5 has demonstrated promising potential in the GUI agent domain through reinforcement learning. Although building an end-to-end reinforcement learning system on top of dynamic environments remains complex and challenging, we firmly believe it is a difficult but correct path.

Going forward, we will persist in leveraging reinforcement learning to enhance UI-TARS's performance in intricate tasks, aiming to achieve human-level proficiency. In the meantime, we will improve the user experience of UI-TARS, enhancing interaction smoothness and enriching capabilities.