23 papers featured, 2 interactive sessions—ByteDance Seed invites you to ICLR 2025!

23 papers featured, 2 interactive sessions—ByteDance Seed invites you to ICLR 2025!

Date

2025-04-23

Category

Academic Collaboration and Events

ICLR 2025 is set to kick off in Singapore! As one of the premier academic conferences in the field of machine learning, ICLR brings together researchers and industry professionals from around the globe.

This year, the ByteDance Seed team has 23 contributions accepted or invited for presentation, including 1 Oral and 1 Spotlight, covering a wide range of topics such as LLM inference optimization, image generation, vision-language alignment, and visual autoregression.

During the event, we'll host a booth at area L07, showcasing cutting-edge technologies like Seedream 3.0, UI-TARS, and the Doubao real-time speech model. Technical leads and researchers from different areas of the Seed team will be on-site to share insights and engage with you!

On-site Events

• verl Expo Talk

Time: April 26, 13:00 - 14:00 (GMT+8)

Location: Peridot 202 - 203

Topic: verl: Flexible and Efficient Infrastructures for Post-training LLMs Join the core contributors of the open-source RLHF framework, verl, as they share insights into its technical development and practical implementation experiences.

• 1st Open Science for Foundation Models (SCI-FM) Workshop

Time: April 28, 09:00 - 17:10 (GMT+8)

Location: Hall 4 #5

Overview: Co-organized by ByteDance Seed, the inaugural SCI-FM Workshop focuses on open-source research and transparency in foundation models, covering key topics such as dataset curation, training strategies, and evaluation methodologies. The workshop will bring together scholars from around the world to explore and advance the openness and development of foundation models. For more details, visit: https://open-foundation-model.github.io/

Featured Papers

We have curated 6 featured papers that were either accepted or invited for presentation:

Paper URL:https://arxiv.org/pdf/2502.20766

Presentation Schedule:April 24, 10:30–12:00, Oral Session 1A

Large language models (LLMs) encounter computational challenges during longsequence inference, especially in the attention pre-filling phase, where the complexity grows quadratically with the prompt length. Previous efforts to mitigate these challenges have relied on fixed sparse attention patterns or identifying sparse attention patterns based on limited cases. However, these methods lacked the flexibility to efficiently adapt to varying input demands.

In this paper, we introduce FlexPrefill, a Flexible sparse Pre-filling mechanism that dynamically adjusts sparse attention patterns and computational budget in real-time to meet the specific requirements of each input and attention head. The flexibility of our method is demonstrated through two key innovations:

- Query-Aware Sparse Pattern Determination: By measuring Jensen-Shannon divergence, this component adaptively switches between query-specific diverse attention patterns and predefined attention patterns.

- Cumulative-Attention Based Index Selection: This component dynamically selects query-key indexes to be computed based on different attention patterns, ensuring the sum of attention scores meets a predefined threshold.

FlexPrefill adaptively optimizes the sparse pattern and sparse ratio of each attention head based on the prompt, enhancing efficiency in long-sequence inference tasks. Experimental results show significant improvements in both speed and accuracy over prior methods, providing a more flexible and efficient solution for LLM inference.

Paper URL:https://arxiv.org/pdf/2409.16211

Code Repository :https://github.com/markweberdev/maskbit

Masked transformer models for class-conditional image generation have become a compelling alternative to diffusion models. Typically, comprising two stages – an initial VQGAN model for transitioning between latent space and image space, and a subsequent Transformer model for image generation within latent space – these frameworks offer promising avenues for image synthesis.

In this study, we present two primary contributions: Firstly, an empirical and systematic examination of VQGANs, leading to a modernized VQGAN. Secondly, a novel embedding-free generation network operating directly on bit tokens – a binary quantized representation of tokens with rich semantics.

The first contribution furnishes a transparent, reproducible, and high-performing VQGAN model, enhancing accessibility and matching the performance of current state-of-the-art methods while revealing previously undisclosed details.

The second contribution demonstrates that embedding-free image generation using bit tokens achieves a new state-of-the-art FID of 1.52 on the ImageNet 256 × 256 benchmark, with a compact generator model of mere 305M parameters.

Paper URL:https://arxiv.org/pdf/2312.10300

Code Repository :https://github.com/bytedance/Shot2Story

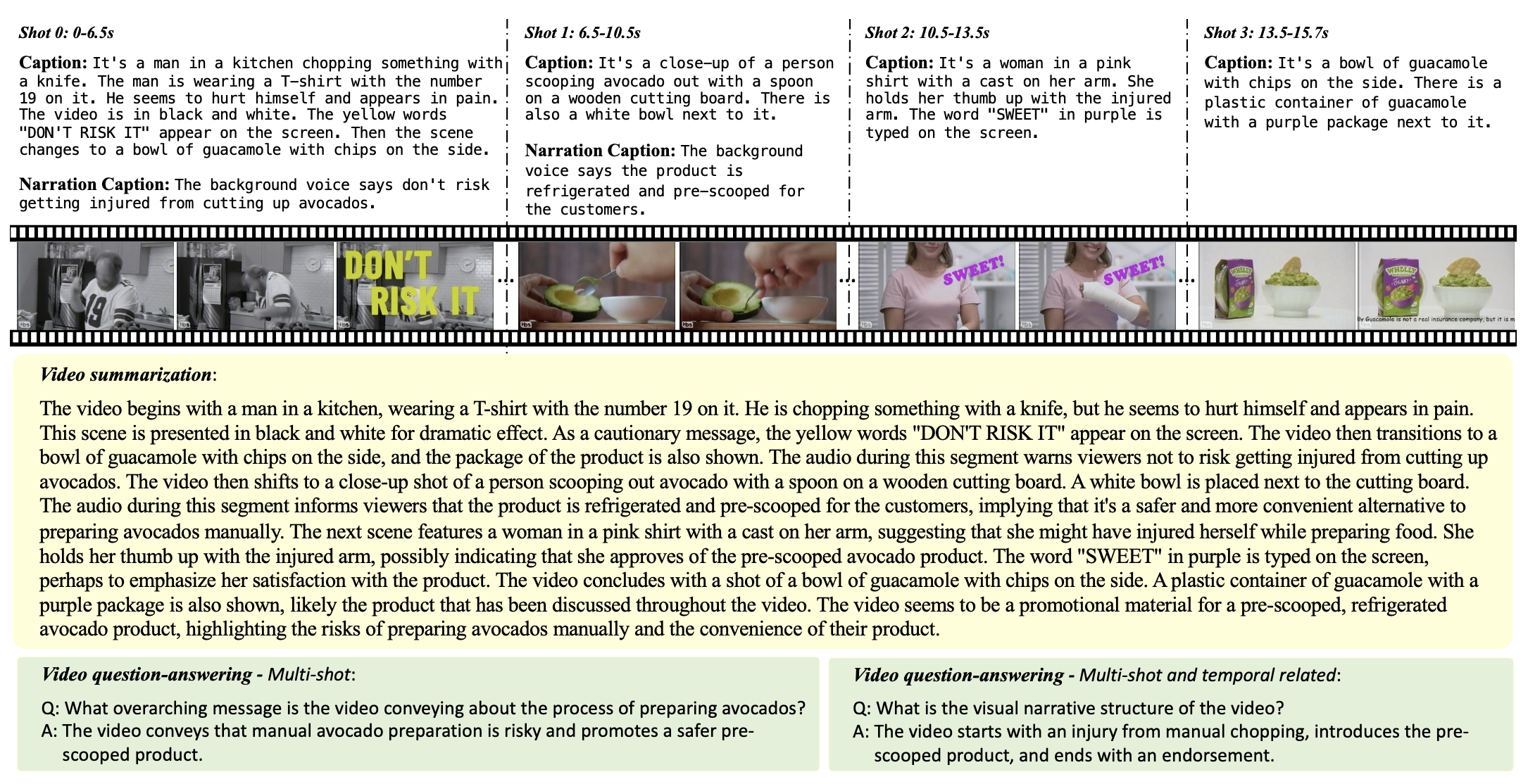

A short clip of video may contain progression of multiple events and an interesting storyline. A human need to capture both the event in every shot and associate them together to understand the story behind it. In this work, we present a new multishot video understanding benchmark Shot2Story with detailed shot-level captions, comprehensive video summaries and question-answering pairs.

Shot2Story Annotation Example: Single-shot visual and speech descriptions, multi-shot video summarization, and Q&A pairs

To facilitate better semantic understanding of videos, we provide captions for both visual signals and human narrations. We design several distinct tasks including single-shot video captioning, multi-shot video summarization, and multi-shot video question answering.

Preliminary experiments show some challenges to generate a long and comprehensive video summary for multi-shot videos. Nevertheless, the generated imperfect summaries can already achieve competitive performance on existing video understanding tasks such as video question-answering, promoting an underexplored setting of video understanding with detailed summaries.

Paper URL:https://arxiv.org/pdf/2410.18745

Advancements in distributed training and efficient attention mechanisms have significantly expanded the context window sizes of large language models (LLMs). However, recent work reveals that the effective context lengths of open-source LLMs often fall short, typically not exceeding half of their training lengths.

In this work, we attribute this limitation to the left-skewed frequency distribution of relative positions formed in LLMs pretraining and post-training stages, which impedes their ability to effectively gather distant information.

To address this challenge, we introduce ShifTed Rotary position embeddING (STRING). STRING shifts welltrained positions to overwrite the original ineffective positions during inference, enhancing performance within their existing training lengths.

Experimental results show that without additional training, STRING dramatically improves the performance of the latest large-scale models, such as Llama3.1 70B and Qwen2 72B, by over 10 points on popular long-context benchmarks RULER and InfiniteBench, establishing new state-of-the-art results for open-source LLMs. Compared to commercial models, Llama 3.1 70B with STRING even achieves better performance than GPT-4-128K and clearly surpasses Claude 2 and Kimi-chat.

Paper URL:https://arxiv.org/pdf/2406.07537

Code Repository :https://github.com/bytedance/Shot2Story

Recently, the computer vision community has applied the state-space model Mamba to various tasks, though its scalability and training efficiency still require improvement. To address this, we propose a novel autoregressive visual pretraining method called Autoregressive Mamba (ARM), which integrates Mamba’s linear attention mechanism with a stepwise autoregressive modeling strategy to significantly enhance efficiency and performance in visual tasks.

By grouping adjacent image patches into prediction units, ARM reduces computational complexity while accelerating training speed. Experiments demonstrate that ARM achieves 85.0% accuracy on ImageNet with an input size of 224×224, improving to 85.5% when scaled to 384×384—substantially outperforming other Mamba variants. Additionally, ARM exhibits stronger robustness and generalization in out-of-domain image testing (e.g., ImageNet-A, ImageNet-R, etc.). Its training efficiency is twice as high as contrastive learning and masked modeling methods, completing benchmark pretraining in just 34 hours.

The development of ARM establishes a foundation for Mamba’s applications in computer vision. It not only resolves stability issues in large-scale model training but also provides new directions for long-sequence modeling and multimodal research, pushing the boundaries of performance in visual tasks.

Paper URL:https://arxiv.org/pdf/2405.18424

Code Repository :https://zqh0253.github.io/3DitScene

Scene image editing is crucial for entertainment, photography, and advertising design. Existing methods solely focus on either 2D individual object or 3D global scene editing. This results in a lack of a unified approach to effectively control and manipulate scenes at the 3D level with different levels of granularity.

Support object-level 3D editing for any given image

Scene image editing is critical for entertainment, photography, and advertising design. We propose 3DitScene, an innovative scene editing framework that enables seamless 2D-to-3D scene editing through language-guided disentangled Gaussian splatting.

Unlike traditional methods that focus solely on 2D or global 3D editing, 3DitScene provides a unified scene representation that supports both flexible adjustments to the entire scene and precise operations on individual objects, empowering users with strong control over scene composition and object editing. By embedding linguistic features extracted from CLIP into 3D geometry and optimizing scene representations using generative models (such as Stable Diffusion), 3DitScene generates high-quality 3D representations from single images while ensuring 3D consistency and rendering quality throughout the editing process.

Experimental results show that 3DitScene significantly outperforms existing methods in object manipulation (e.g., moving, rotating, deleting) and camera control, while also delivering strong performance in image consistency and detail quality. Notably, its support for object-level separation and scene layout enhancement effectively improves the visual quality of occluded regions and the overall editing effects.

Click for more details on Seed's ICLR 2025 accepted papers and other publicly available research!