ByteDance Seed Prover Achieves Silver Medal Score in IMO 2025

ByteDance Seed Prover Achieves Silver Medal Score in IMO 2025

Date

2025-07-23

Category

Team News

By official invitation, the ByteDance Seed team participated in IMO (The International Mathematical Olympiad) 2025, deploying our specialized formal mathematical reasoning model, Seed Prover. After three days of sustained effort, Seed Prover fully solved 4 out of 6 problems and partially solved 1, receiving an IMO-certified score of 30 points, equivalent to a silver medal performance (scores per problem: 2/7/7/7/7/0). Our subsequent extended search efforts resulted in the successful completion of proofs for the first five problems.

Seed Prover is constructed upon the Lean proof assistant and trained using multi-stage reinforcement learning. It guarantees the absolute reliability of mathematical proofs by facilitating interaction between natural language reasoning and formally verified code. Through extended chain-of-thought reasoning and computational scaling via multi-agent interaction, Seed Prover is capable of deeply analyzing individual mathematical problems continuously over several days.

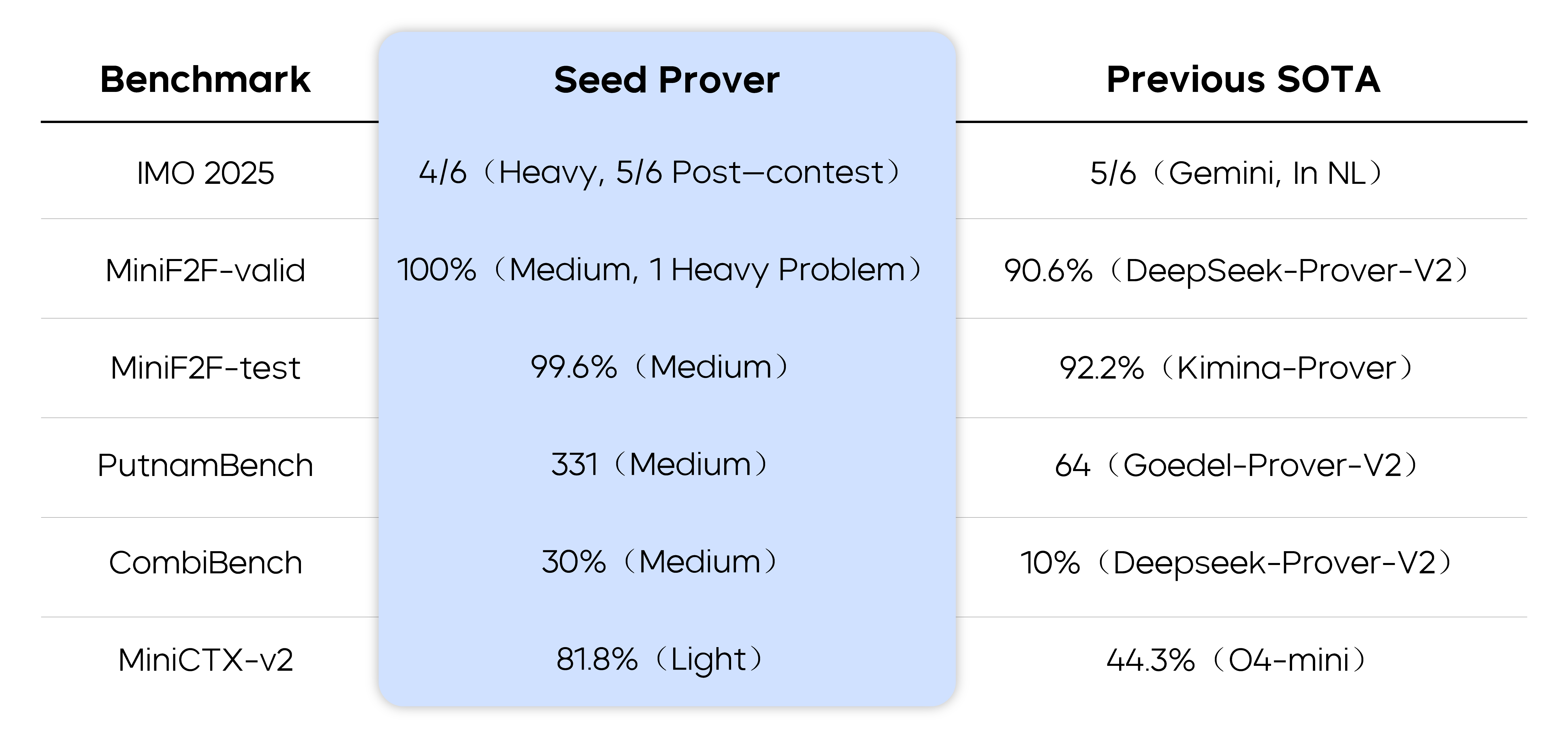

In addition to its outstanding performance at IMO, Seed Prover has achieved record-breaking outcomes on various benchmark tests for formal mathematical reasoning.

Problem-Solving Performance at IMO 2025

Seed Prover solved four IMO 2025 problems over three days. Prior to the proof attempts, problem statements were manually formalized to ensure accuracy. Seed Prover successfully solved Problem 2 (geometry) and Problem 3 (number theory) from Day 1, and Problem 4 (number theory) and Problem 5 (combinatorics/algebra) from Day 2. Post-competition, Seed Prover also managed to prove Problem 1 (combinatorics).

Detailed problem-solving breakdown:

-

Problem 1 (Combinatorics): Correct construction achieved during the competition; full proof completed post-competition through four additional days of search, totaling 4000 lines of formalized proof.

-

Problem 2 (Geometry): Proof automatically generated and validated by the Seed Geometry subsystem within two seconds.

-

Problem 3 (Number Theory): Three days of reasoning, resulting in 2000 lines of formalized proof.

-

Problem 4 (Number Theory): Similarly required three days, producing 4000 lines of formalized proof.

-

Problem 5 (Combinatorics/Algebra): Completed within one day; the proof differs from publicly available human-authored solutions.

Here is the formalized proof searched by Seed Prover:

P1:https://github.com/ByteDance-Seed/Seed-Prover/blob/main/SeedProver/imo2025/p1.lean

P2:https://github.com/ByteDance-Seed/Seed-Prover/blob/main/SeedProver/imo2025/p2_proof.pdf

P3:https://github.com/ByteDance-Seed/Seed-Prover/blob/main/SeedProver/imo2025/p3.lean

P4:https://github.com/ByteDance-Seed/Seed-Prover/blob/main/SeedProver/imo2025/p4.lean

P5:https://github.com/ByteDance-Seed/Seed-Prover/blob/main/SeedProver/imo2025/p5.lean

Test-time scaling for Seed Prover

Seed Prover’s effectiveness arises from multi-stage reinforcement learning, enhancing its long chain-of-thought reasoning ability for complex mathematical problems and enabling diversified interactions with Lean. Another critical factor lies in how Seed Prover expands its reasoning budget at test time; to this end, the team designed a multi-tiered test-time expansion strategy:

Lightweight

Previous work uses Pass@K to evaluate LLMs with long COT ability on ATP. We found that using Lean compiler feedback can significantly improve the proving performance, which can exceed the inference token budget for a single pass.

The lightweight inference setting is to iteratively refine proofs within eight iterations (combining Pass@8), completing typically within one hour. This mode enables solving problems at difficulty levels exemplified by IMO 2022 P2, which would otherwise require extensive computational resources (Pass@8192).

Medium

To prove a difficult competition-level problem, it may contain a complex proof. The medium inference setting includes external optimizations on original proofs and internal optimizations focused on challenging lemmas that the external optimizations fail. This provides enough thinking budget for each difficult lemma.

This setup allows solving more intricate problems, such as IMO 2003 P6 (MOHS difficulty = 35) and IMO 2025 P5, often producing proofs exceeding 1000 lines and requiring hours to days of computational effort.

Heavyweight

Medium inference setting permits Seed Prover to think deeply in every detail of the proof, but it cannot explore widely about the question to find interesting properties. Under the heavyweight inference setting, the system constructs a conjecture pool and a lemma pool. The conjecture pool is initialized by the given problem, and the lemma pool is initially empty.

During the inference, Seed Prover systematically tests, proves, or refutes conjectures and generates new ones as needed. After prolonged computation, a lemma pool containing thousands of mathematical facts is created, and the most valuable lemmas are leveraged to complete difficult proofs. IMO 2025 Problems 3 and 4 were solved under this setting.

Multi-Benchmark Test Results of Seed Prover

Seed Prover has been evaluated against multiple formal reasoning benchmarks, outperforming previous some state-of-the-art results:

Seed Prover has successfully proved 79% previously formalized IMO problems, and almost solved the MiniF2F benchmark. It further proves more than half of the Putnam benchmark, which is an Undergraduate-level Math Contest. For other benchmarks, including CombiBench and MiniCTX-v2, Seed Prover shows superior performance. These results suggest that Seed Prover is effective not only in high school competition-level mathematics but also across broader mathematical domains.

Results until July 22nd

Conclusion and Future Outlook

We would like to express our gratitude to the IMO Committee for inviting ByteDance Seed to participate, as well as for assisting in organizing the scoring process. The final score of Seed Prover in IMO 2025 disclosed in this article has been confirmed with the IMO Committee before its release.

Seed Prover's performance at IMO 2025 demonstrates the considerable potential of integrating large language models with formal verification methods. The computational expansion strategies employed have significantly enhanced system performance through in-depth and extensive reasoning. Recognizing that competition mathematics represents only a subset of mathematics as a whole, future work will aim to extend exploration into broader mathematical domains.