Seed3D 1.0 Released: Generate High-Fidelity 3D Models from Single Images, Featuring SOTA Texturing

Date

2025-10-23

Category

Models

World simulators are crucial for the development of embodied AI. Ideally, they can provide complex scene simulations and high-quality synthetic data for robot training, while also supporting real-time interactive training environments.

However, current technologies still face bottlenecks: Video generation-based simulators can produce photorealistic visuals but lack physical interaction capabilities, while traditional graphics-based simulators offer accurate physical interactions but fall short in content diversity.

Today, the ByteDance Seed team releases Seed3D 1.0, a large 3D generative model capable of end-to-end generation of high-fidelity, simulation-ready 3D models from a single image. Built on an innovative Diffusion Transformer (DiT) architecture and trained on large-scale datasets, Seed3D 1.0 generates complete 3D models with detailed geometry, photorealistic textures, and physically based rendering (PBR) materials.

3D models generated by Seed3D 1.0 can be integrated into simulation environments for robot training.

3D models generated by Seed3D 1.0 can be imported directly into simulation engines such as Isaac Sim and, with minimal adaptation, used to train large embodied-AI models. Through step-by-step scene generation, Seed3D 1.0 can also scale from generating single objects to constructing complete 3D scenes.

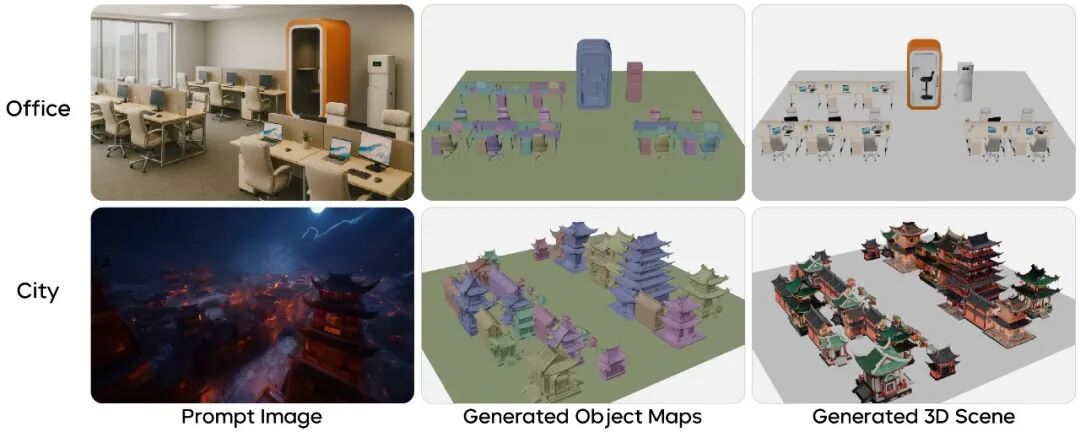

Seed3D 1.0 can generate 3D scenes featuring ancient architectural complexes from images

In benchmark comparisons with existing 3D generative models, Seed3D 1.0 shows clear advantages: its texture and material generation surpasses previous open-source and proprietary models, and its geometry generation outperforms industry models with more parameters, giving it industry-leading overall capability.

The technical report for Seed3D 1.0 is now publicly available, and the API has been released. Visit our project homepage to explore the capabilities, and feel free to try it out and share your feedback.

Project Homepage:

https://seed.bytedance.com/seed3d

Try Now:

https://console.volcengine.com/ark/region:ark+cn-beijing/experience/vision?modelId=doubao-seed3d-1-0-250928&tab=Gen3D

1. Seed3D 1.0: End-to-End High-Fidelity 3D Content Generation

Because physical accuracy is a priority, Seed3D 1.0 follows a graphics-based world simulator approach. It aims to overcome that approach's inherent challenges: limited diversity of 3D models, the high cost of manual creation, and lengthy development cycles.

During the R&D process of Seed3D 1.0, the team gathered and processed large-scale, high-quality 3D data to train a DiT-based, scalable 3D generative foundation model. Additionally, Seed3D 1.0 adopts an end-to-end technical pipeline, enabling rapid generation of simulation-ready 3D models from single images.

Data Construction

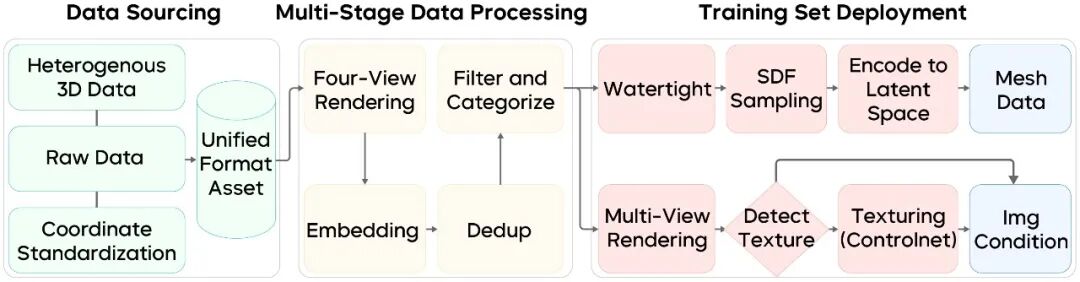

The primary training challenge is the complexity and diversity of 3D data. As illustrated in the figure, the team built a comprehensive three-stage data processing pipeline for Seed3D 1.0 that transforms massive, heterogeneous raw 3D data into high-quality training datasets.

The processing workflow consists of three key steps: (1) the standardization of the raw data's coordinate systems and file formats; (2) preprocessing, involving deduplication, pose normalization, and category annotation; and (3) the generation of standardized training data through geometric surface reconstruction and multi-view image rendering.
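The post does not publish Seed3D's processing code. As a rough, hypothetical illustration of what steps (1) and (2) involve for a single mesh, the Python sketch below converts a Z-up asset to a Y-up convention, normalizes position and scale (a simplified stand-in for pose normalization that leaves orientation untouched), and drops duplicates by hashing the normalized vertex set. All function names are illustrative; category annotation and step (3) are not shown.

```python
import hashlib
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def z_up_to_y_up(vertices: List[Vec3]) -> List[Vec3]:
    """Step (1), hypothetical: rotate a Z-up asset by -90 degrees about X so +Z maps to +Y."""
    return [(x, z, -y) for x, y, z in vertices]


def normalize_pose(vertices: List[Vec3]) -> List[Vec3]:
    """Step (2), simplified: center the bounding box at the origin and scale its
    longest side to 2, so the asset fits in [-1, 1]^3. Orientation is unchanged."""
    mins = [min(v[i] for v in vertices) for i in range(3)]
    maxs = [max(v[i] for v in vertices) for i in range(3)]
    center = [(lo + hi) / 2 for lo, hi in zip(mins, maxs)]
    extent = max(hi - lo for lo, hi in zip(mins, maxs))
    scale = 2.0 / extent if extent > 0 else 1.0
    return [tuple((v[i] - center[i]) * scale for i in range(3)) for v in vertices]


def geometry_fingerprint(vertices: List[Vec3], decimals: int = 4) -> str:
    """Hash the rounded, order-independent vertex set (adding 0.0 turns -0.0 into 0.0)."""
    rounded = sorted(tuple(round(c, decimals) + 0.0 for c in v) for v in vertices)
    return hashlib.sha256(repr(rounded).encode("utf-8")).hexdigest()


def deduplicate(assets: List[Tuple[str, List[Vec3]]]) -> List[str]:
    """Keep the first asset for each fingerprint of its pose-normalized geometry."""
    seen, kept = set(), []
    for name, vertices in assets:
        key = geometry_fingerprint(normalize_pose(vertices))
        if key not in seen:
            seen.add(key)
            kept.append(name)
    return kept
```

An exact hash only catches copies that differ by translation and uniform scale; a production pipeline would likely need a tolerance-aware or learned similarity measure to catch near-duplicates.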

The team also developed an efficient distributed data processing framework that supports the storage, indexing, and visualization of large-scale data. Furthermore, a stable training infrastructure was built to ensure the reliability of large-scale diffusion model training.

Model Architecture

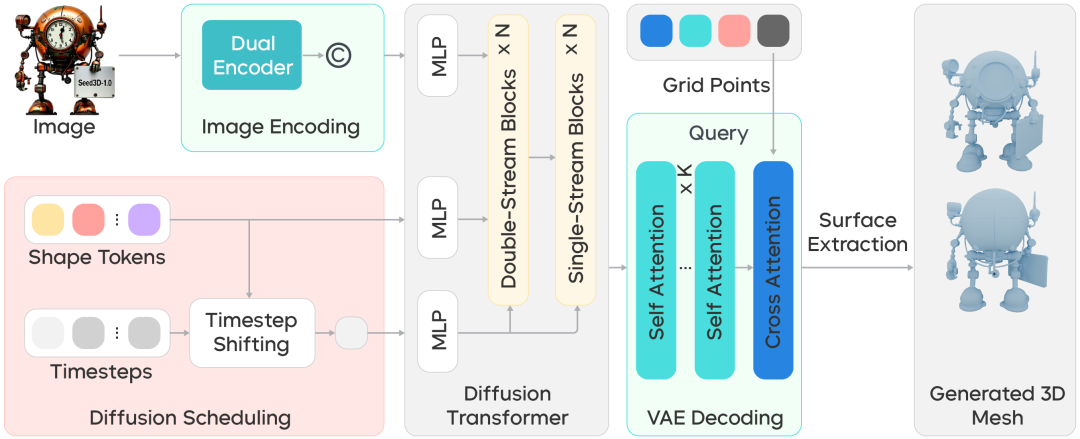

Seed3D 1.0 builds its geometry generation and texture mapping models on DiT, an architecture widely used in generative AI.

- High-Fidelity Geometry Generation

Seed3D 1.0 constructs high-fidelity 3D geometry that captures accurate structural details while ensuring physical integrity, such as closed surfaces and manifold geometry, to meet simulation requirements. As shown in the figure, Seed3D 1.0 introduces two core modules for geometry generation:

(1) VAE Encoder: Learns compact representations of 3D geometry, efficiently handling complex mesh structures while preserving surface details.

(2) DiT Model: Generates high-quality 3D geometry in the latent space based on input images.

This architecture enhances generation efficiency while ensuring geometric accuracy and physical integrity.
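The "closed surfaces and manifold geometry" requirement has a simple combinatorial test on a triangle mesh: every undirected edge must be shared by exactly two faces, and in a consistently oriented closed mesh each directed edge appears exactly once. The sketch below is a generic check of that kind, not Seed3D's validation code; it inspects only edges, so non-manifold vertices (two surfaces touching at a single point) would still pass.

```python
from collections import Counter
from typing import Iterable, Tuple

Face = Tuple[int, int, int]  # triangle as three vertex indices


def _edges(face: Face):
    a, b, c = face
    return ((a, b), (b, c), (c, a))


def is_closed_edge_manifold(faces: Iterable[Face]) -> bool:
    """True if every undirected edge is shared by exactly two triangles."""
    counts = Counter()
    for face in faces:
        for u, v in _edges(face):
            counts[(min(u, v), max(u, v))] += 1
    return bool(counts) and all(n == 2 for n in counts.values())


def is_consistently_oriented(faces: Iterable[Face]) -> bool:
    """True if each directed edge appears once and its reverse also appears once,
    i.e. neighboring triangles agree on winding order."""
    directed = Counter()
    for face in faces:
        for edge in _edges(face):
            directed[edge] += 1
    # Counter returns 0 for missing keys without inserting them, so this lookup is safe.
    return bool(directed) and all(
        n == 1 and directed[(v, u)] == 1 for (u, v), n in directed.items()
    )


def is_watertight(faces: Iterable[Face]) -> bool:
    faces = list(faces)  # materialize so generators can be scanned twice
    return is_closed_edge_manifold(faces) and is_consistently_oriented(faces)
```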

- Multi-View Texture Generation

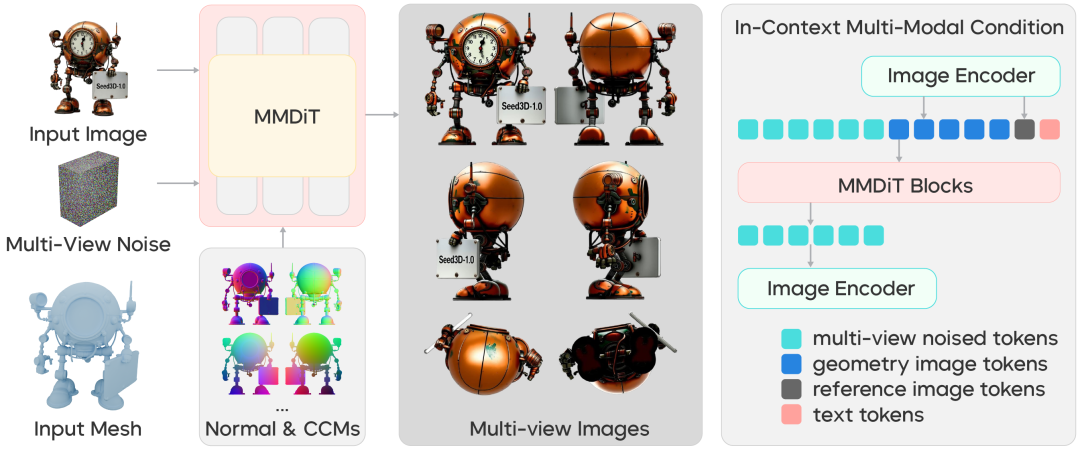

Beyond detailed geometry, texture mapping is crucial to the visual quality and diversity of generated 3D content. As illustrated in the figure, Seed3D 1.0 introduces a multi-view image generation model based on a multimodal DiT (MMDiT) architecture. The model takes a reference image and renderings of the 3D geometry as input and outputs texture images that are consistent across multiple views.

The core innovations are an in-context multimodal conditioning strategy and an optimized positional encoding mechanism, which together ensure consistency across views. Because multi-view generation increases the sequence length, the team also employed shifted timestep sampling to maintain generation quality.
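The post does not give Seed3D's exact schedule. One widely used form of shifted timestep sampling, introduced for resolution-dependent sampling in Stable Diffusion 3, maps a timestep t in [0, 1] (1 = pure noise) to αt / (1 + (α - 1)t) with α = √(m/n), where m is the current token count and n a base token count. The sketch below assumes that formula, treating V views of a base-sized image as m = V·n. With α > 1, more sampling steps fall at high noise levels, compensating for the fact that a longer token sequence retains more signal at any given noise level.

```python
import math
from typing import List


def resolution_shift(seq_len: int, base_seq_len: int) -> float:
    """SD3-style shift factor alpha = sqrt(m / n) for m tokens vs. a base of n tokens."""
    return math.sqrt(seq_len / base_seq_len)


def shift_timestep(t: float, alpha: float) -> float:
    """Map t in [0, 1] (1 = pure noise) to alpha*t / (1 + (alpha - 1)*t).
    Endpoints stay fixed; for alpha > 1 intermediate values move toward 1 (more noise)."""
    return alpha * t / (1 + (alpha - 1) * t)


def shifted_schedule(num_steps: int, alpha: float) -> List[float]:
    """Uniform grid from 1 down to 0, passed through the shift."""
    return [shift_timestep(1 - i / num_steps, alpha) for i in range(num_steps + 1)]
```

For example, with four views of a base-sized image (a hypothetical setting), α = √4 = 2 and the midpoint t = 0.5 is shifted to 2(0.5) / (1 + 0.5) = 2/3.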

- PBR Material Generation

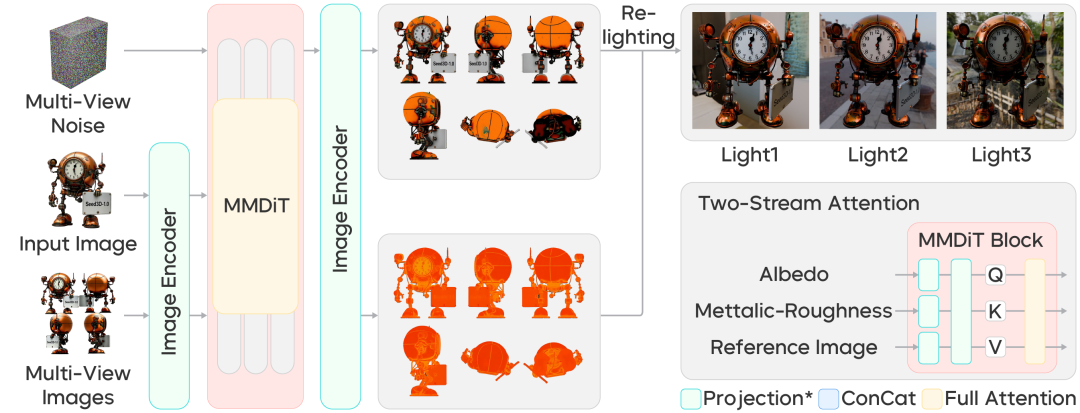

High-quality materials are essential for photorealistic 3D rendering. PBR materials comprise three components (albedo, metalness, and roughness) that together determine how realistic an object looks. Current PBR synthesis methods fall mainly into two categories: generation-based methods, which synthesize PBR maps from reference images and 3D geometry, and estimation-based methods, which decompose multi-view images directly into material components. Because high-quality PBR training data is scarce, generation-based methods often produce less realistic results than estimation-based methods.

Seed3D 1.0 therefore adopts the estimation paradigm. Unlike existing methods, the team built its PBR model on a DiT network, which keeps generation efficient, improves the accuracy of material estimation, and generalizes better when training data is limited.

As shown in the figure, Seed3D 1.0 learns material decomposition directly from multi-view images. It not only accurately decomposes the images into various material components but also generates PBR materials that exhibit realistic visual effects under different lighting conditions.
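To make the three PBR components concrete, the sketch below shows how albedo, metalness, and roughness are typically consumed by a renderer under the glTF 2.0 metallic-roughness convention (an assumption; the post does not say which shading model Seed3D targets). Metalness blends between a dielectric's fixed 4% reflectance and an albedo-tinted metallic reflectance, and roughness sets the width of the GGX specular lobe. It is a shading illustration, not the DiT-based estimation model itself.

```python
import math
from typing import Tuple

RGB = Tuple[float, float, float]
DIELECTRIC_F0 = 0.04  # glTF 2.0 reflectance at normal incidence for non-metals


def brdf_inputs(albedo: RGB, metalness: float, roughness: float):
    """Split PBR maps into diffuse color, specular F0, and GGX alpha (glTF convention)."""
    diffuse = tuple(c * (1.0 - metalness) for c in albedo)  # metals have no diffuse term
    f0 = tuple(DIELECTRIC_F0 * (1.0 - metalness) + c * metalness for c in albedo)
    alpha = roughness * roughness  # perceptual roughness squared
    return diffuse, f0, alpha


def schlick_fresnel(f0: RGB, cos_theta: float) -> RGB:
    """Schlick's approximation: reflectance rises toward 1 at grazing angles."""
    k = (1.0 - cos_theta) ** 5
    return tuple(f + (1.0 - f) * k for f in f0)


def ggx_ndf(n_dot_h: float, alpha: float) -> float:
    """GGX / Trowbridge-Reitz normal distribution; smaller alpha gives a sharper highlight."""
    a2 = alpha * alpha
    d = n_dot_h * n_dot_h * (a2 - 1.0) + 1.0
    return a2 / (math.pi * d * d)
```

This is why an accurate metalness map matters for the "appropriate metallic reflectance" discussed in the evaluation: a wrong metalness value changes both the diffuse color and the tint of specular highlights.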

2. Evaluation Results: Seed3D 1.0 Excels in Reference Consistency, Accurately Reconstructing Fine Details

To validate the generation quality of Seed3D 1.0, the team conducted an extensive comparative evaluation of two core tasks, geometry generation and material/texture generation, assessing performance through quantitative benchmarks, qualitative analysis, and human evaluation.

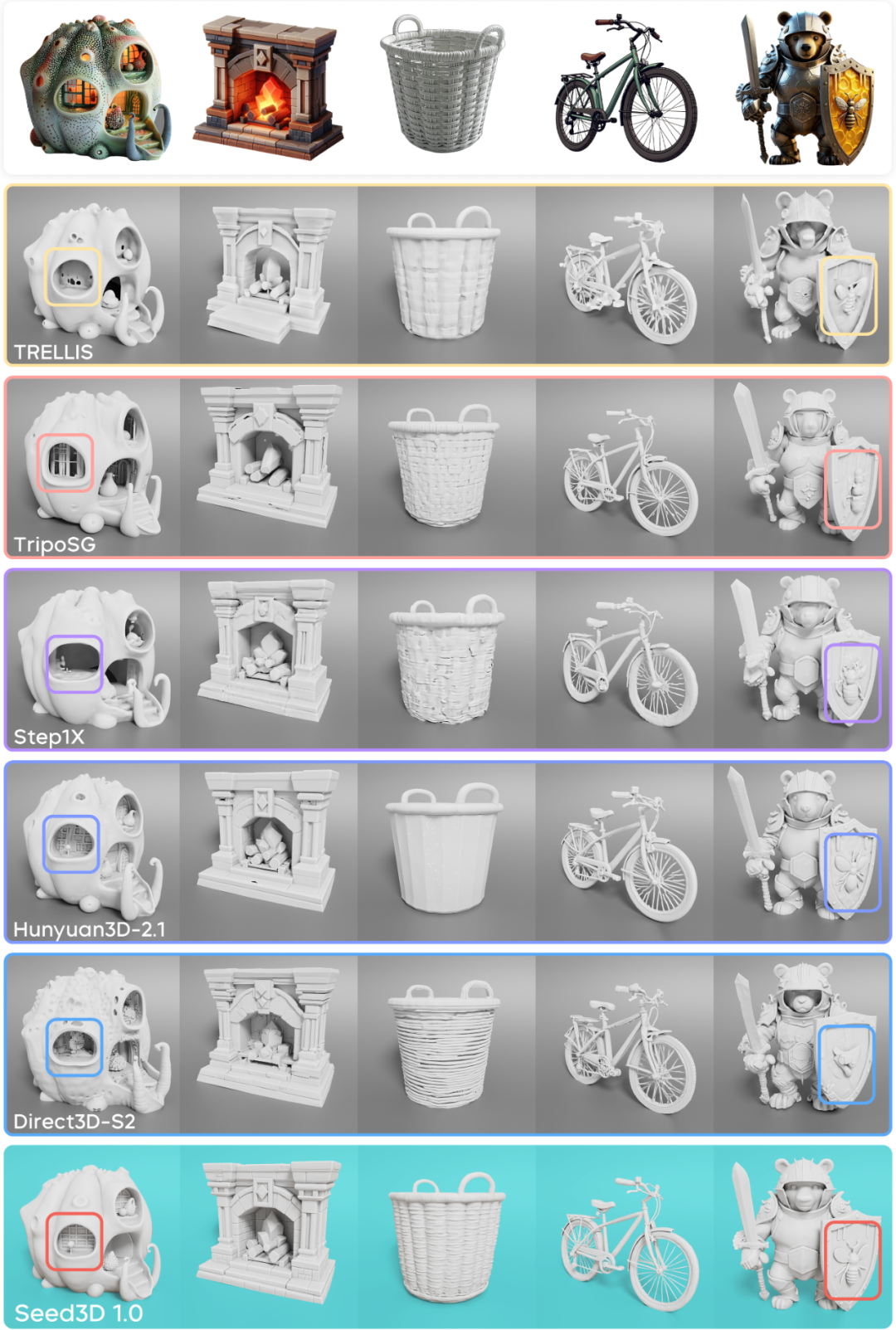

Geometry Generation: Fewer Parameters, Superior Results

As shown in the figure above, Seed3D 1.0 outperforms competing models at preserving details and maintaining structural integrity. With 1.5B parameters, Seed3D 1.0 outperforms a larger 3B-parameter model (Hunyuan3D-2.1) and reconstructs fine features of complex objects more accurately.

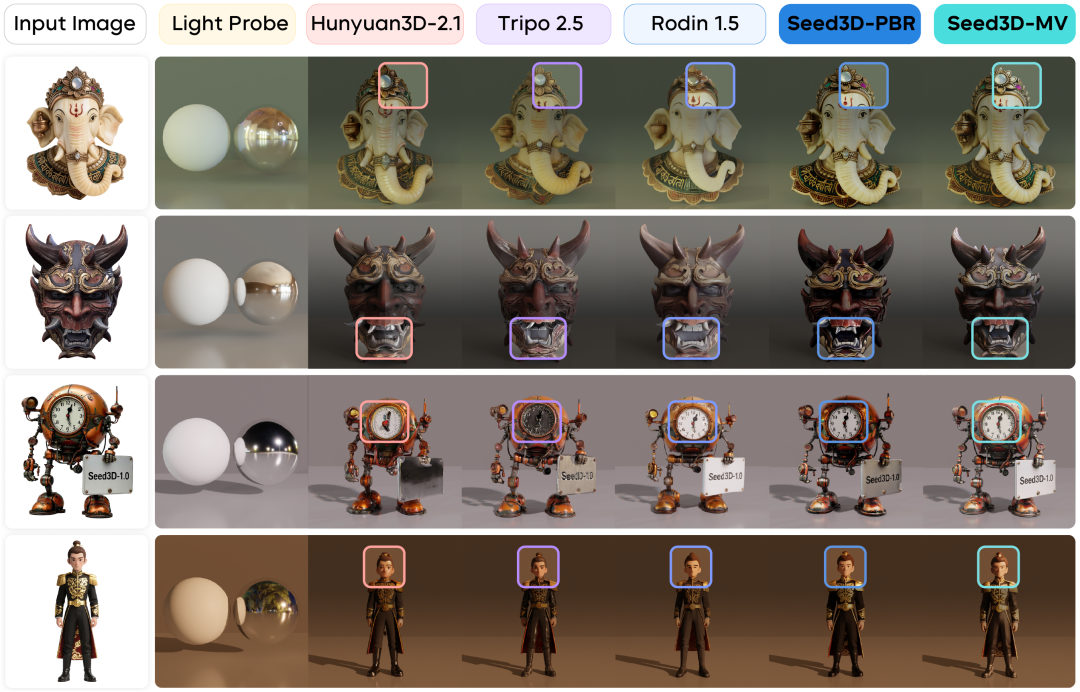

Texture/Material Generation: Excellence in Image Consistency

Qualitative comparisons highlight Seed3D 1.0's advantage in texture generation: it stays highly consistent with the reference image and has a particularly notable edge in rendering fine text. On a steampunk clock, for example, other models blur the details, whereas Seed3D 1.0 keeps fine elements such as the numerals on the clock face and the mechanical components sharp. In character generation, Seed3D 1.0 accurately reconstructs facial features and fabric textures, whereas baseline models tend to lose consistency with the reference. The PBR materials generated by Seed3D 1.0 also show stronger surface realism, with appropriate metallic reflectance and skin subsurface scattering, so objects look natural even under intense lighting.

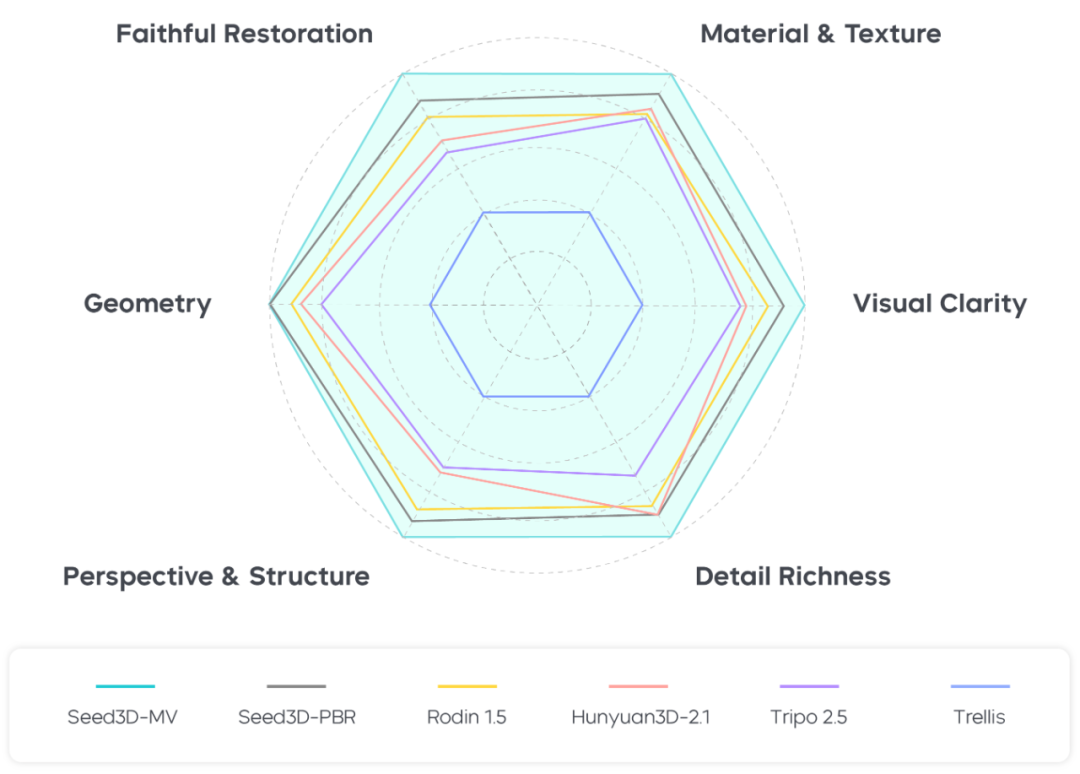

Human Evaluation: Outstanding Performance in Geometry and Material/Texture Generation

To evaluate the generation results comprehensively, the team also asked 14 human evaluators to rate the outputs of different models on 43 input images across multiple dimensions: visual clarity, faithful restoration, geometry quality, perspective & structure accuracy, material & texture realism, and detail richness. Seed3D 1.0 received consistently higher ratings across all dimensions.

In geometry generation, Seed3D 1.0 outperformed other baseline models in both geometric quality and perspective & structure accuracy. Furthermore, it excels in material and texture generation, demonstrating significant advantages in reference image consistency, visual clarity, and detail richness, thereby achieving state-of-the-art (SOTA) performance.
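The post does not specify the rating scale or how scores were aggregated. Assuming every evaluator rated every image, each (model, dimension) pair would pool 14 × 43 = 602 ratings. The sketch below shows one straightforward aggregation, a per-dimension mean followed by picking the top-scoring model; the record layout is hypothetical and no actual ratings are reproduced.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, Tuple

# (evaluator_id, image_id, model, dimension, score): a hypothetical record layout
Rating = Tuple[str, str, str, str, float]


def mean_scores(ratings: Iterable[Rating]) -> Dict[Tuple[str, str], float]:
    """Average score for every (model, dimension) pair, pooled over evaluators and images."""
    buckets = defaultdict(list)
    for _evaluator, _image, model, dimension, score in ratings:
        buckets[(model, dimension)].append(score)
    return {key: mean(scores) for key, scores in buckets.items()}


def best_model_per_dimension(ratings: Iterable[Rating]) -> Dict[str, str]:
    """Model with the highest mean score on each dimension (first seen wins ties)."""
    best: Dict[str, Tuple[str, float]] = {}
    for (model, dimension), score in mean_scores(ratings).items():
        if dimension not in best or score > best[dimension][1]:
            best[dimension] = (model, score)
    return {dimension: model for dimension, (model, _score) in best.items()}
```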

3. From Single Objects to Complete Scenes: Powering World Simulators with Generated Content

Leveraging Seed3D 1.0's high-quality generation capabilities, the team has extended its application to two key scenarios.

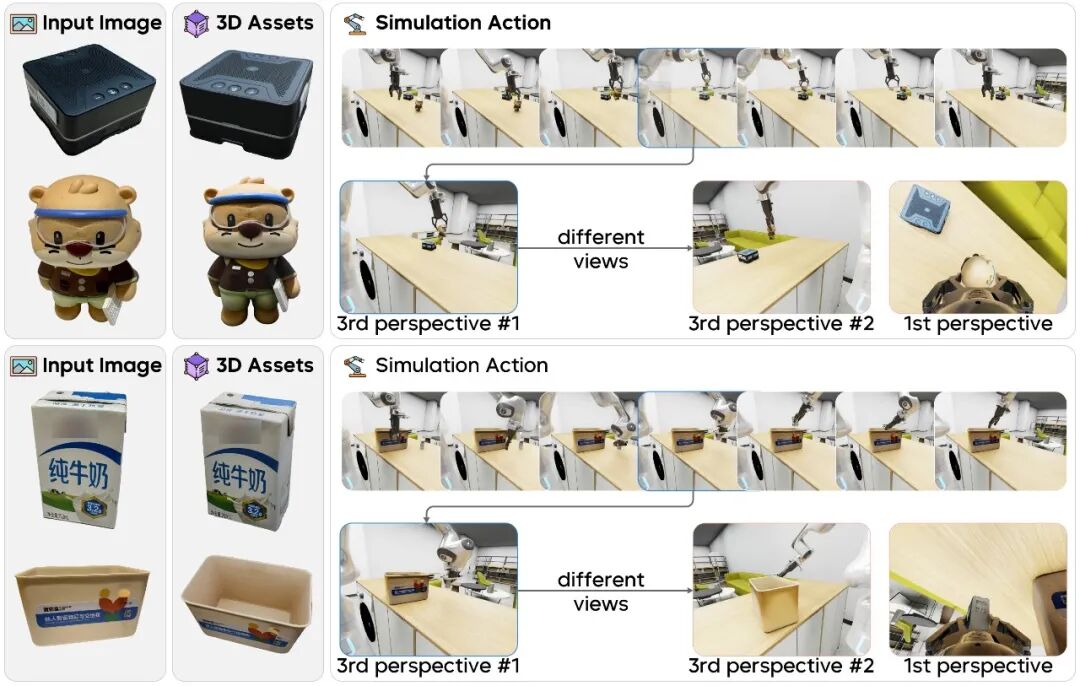

Simulation-Ready 3D Model Generation

As shown in the figure, Seed3D 1.0 can generate 3D models directly from single input images. These models can be integrated directly into Isaac Sim for physics-based simulation and robotic manipulation testing.

To import 3D models into the simulator, Seed3D 1.0 uses a vision-language model (VLM) to estimate each model's scale and rescales it to real-world physical dimensions. Isaac Sim can automatically generate collision meshes from watertight, manifold geometry and apply default physical properties such as friction coefficients, enabling physics simulation immediately without manual tuning.
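Once a VLM has proposed a real-world size for an object, aligning the mesh to it is a uniform rescale. The sketch below assumes the VLM returns the object's longest side in meters (the post does not describe the query or its output format), applies that scale to the vertices, and optionally drops the object onto a ground plane assuming +Y is up. Collision-mesh generation and default physics properties are handled by Isaac Sim, as described above, and are not reproduced here.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def rescale_to_real_size(vertices: List[Vec3], longest_side_m: float) -> Tuple[List[Vec3], float]:
    """Uniformly scale a mesh so its longest bounding-box side equals longest_side_m.

    longest_side_m is assumed to come from a VLM size estimate (hypothetical interface).
    Uniform scaling keeps proportions, so a watertight mesh stays watertight.
    """
    if longest_side_m <= 0:
        raise ValueError("target size must be positive")
    extent = max(
        max(v[i] for v in vertices) - min(v[i] for v in vertices) for i in range(3)
    )
    if extent <= 0:
        raise ValueError("degenerate mesh: zero bounding-box extent")
    s = longest_side_m / extent
    return [(x * s, y * s, z * s) for x, y, z in vertices], s


def rest_on_ground(vertices: List[Vec3], up_axis: int = 1) -> List[Vec3]:
    """Translate so the lowest point touches the ground plane (assumes +Y is up by default)."""
    floor = min(v[up_axis] for v in vertices)
    return [
        tuple(c - floor if i == up_axis else c for i, c in enumerate(v)) for v in vertices
    ]
```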

The team conducted robotic manipulation experiments such as grasping and multi-object interactions within Isaac Sim. The physics engine provides real-time feedback on contact forces, object dynamics, and manipulation outcomes. Models generated by Seed3D 1.0 preserve fine geometric details essential for realistic contact simulation—for example, toys and electronic devices maintain accurate surface features crucial for grasp planning.

These simulation environments offer three key benefits for embodied AI development:

- Scalable generation of training data through diverse manipulation scenarios.

- Interactive learning via real-time feedback on the physical consequences of robot actions.

- Diverse multi-view, multimodal observation data that enables comprehensive evaluation benchmarks for vision-language-action (VLA) models.

Complete 3D Scene Generation

Adopting a step-by-step generation strategy, Seed3D 1.0 can also scale from generating single objects to constructing complete, coherent 3D scenes.

As illustrated in the figure, the system first uses a VLM to extract objects and their spatial relationships from the input image and builds a scene layout. It then generates a 3D model for each object and finally assembles the objects into a complete scene according to the layout. This framework lets Seed3D 1.0 generate a wide variety of 3D environments, from office spaces to urban streetscapes, supplying scene content for world simulators.
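The last of the three stages, placing each generated object according to the layout, amounts to applying a per-object scale, rotation, and translation. The sketch below assumes a hypothetical layout format (a position, a yaw angle about the vertical Y axis, and a uniform scale per object) in right-handed Y-up coordinates; the VLM layout extraction, per-object generation, and any overlap resolution are not shown.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class LayoutEntry:
    """One object in a hypothetical VLM-produced layout (field names are illustrative)."""
    name: str
    position: Vec3   # where the object's origin goes in scene coordinates (meters)
    yaw_deg: float   # rotation about +Y, counter-clockwise when viewed from above
    scale: float     # uniform scale applied to the generated model


def place(vertices: List[Vec3], entry: LayoutEntry) -> List[Vec3]:
    """Scale, then rotate about Y, then translate each vertex."""
    yaw = math.radians(entry.yaw_deg)
    c, s = math.cos(yaw), math.sin(yaw)
    px, py, pz = entry.position
    placed = []
    for x, y, z in vertices:
        x, y, z = x * entry.scale, y * entry.scale, z * entry.scale
        # Rotation about +Y: x' = c*x + s*z, z' = -s*x + c*z
        placed.append((c * x + s * z + px, y + py, -s * x + c * z + pz))
    return placed


def assemble_scene(layout: List[LayoutEntry], models: Dict[str, List[Vec3]]) -> Dict[str, List[Vec3]]:
    """models maps each object name to the vertices of its generated single-object model."""
    return {entry.name: place(models[entry.name], entry) for entry in layout}
```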

4. Summary and Outlook

Although Seed3D 1.0 shows promising performance in 3D model and scene generation, the team recognizes that challenges remain in building world models on top of large 3D generative models, such as further improving generation accuracy and generalization. Large-scale, automated, high-quality 3D scene generation is still at an early stage of exploration.

Moving forward, the team plans to experiment with incorporating multimodal large language models (MLLMs) to enhance the quality and robustness of 3D generation, and to drive the large-scale application of 3D generative models within world simulators.