Seed Research

Explore the breakthrough research and recent papers from our team

Blog

Seed1.8

Seedance 1.5 pro

Seed Prover 1.5

GR-RL

Depth Anything 3

Official Release of Seed1.8: A Generalized Agentic Model

An all-in-one integration of search, coding, and GUI Agent capabilities.

Dec 18, 2025

Publications

Dec 15, 2025

Seedance 1.5 pro: A Native Audio-Visual Joint Generation Foundation Model

Dec 2, 2025

GR-RL: Going Dexterous and Precise for Long-Horizon Robotic Manipulation

Oct 22, 2025

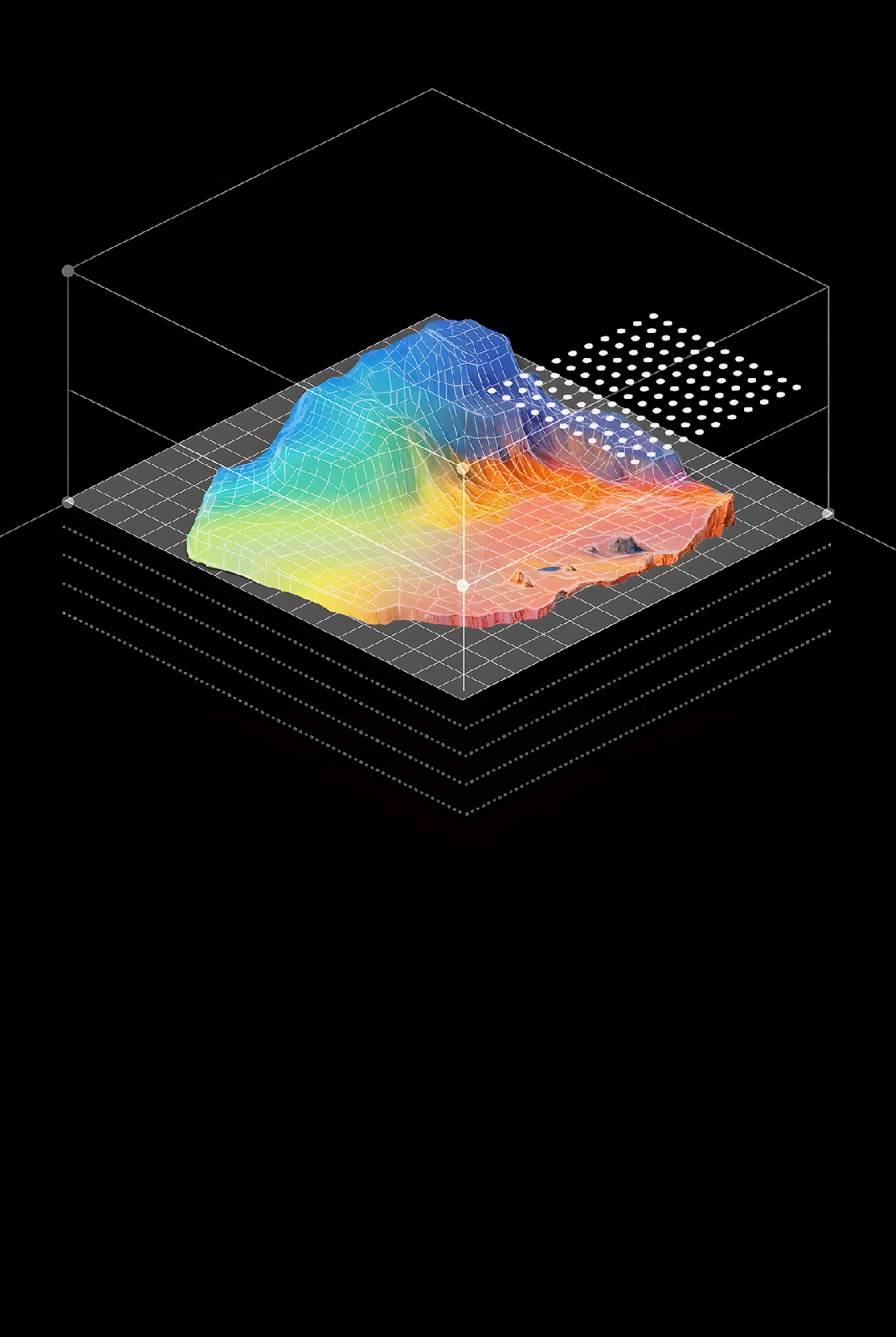

Seed3D 1.0: From Images to High-Fidelity Simulation-Ready 3D Assets

Sep 2, 2025

PXDesign: Fast, Modular, and Accurate De Novo Design of Protein Binders

Jul 31, 2025

Seed-Prover: Deep and Broad Reasoning for Automated Theorem Proving

Mar 14, 2025

Deep Learning Sheds Light on Integer and Fractional Topological Insulators