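Overview

The Seed2.0 model series is now officially released, offering general-purpose Agent models in three sizes: Pro, Lite, and Mini.

The series brings a comprehensive upgrade to multimodal understanding and strengthens LLM and Agent capabilities, so the models can make steady progress on real-world long-horizon tasks.

Seed2.0 also extends its capability boundary from competition-level reasoning to research-level tasks, reaching the industry's top tier on evaluations of tasks with high economic and scientific value.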

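Seed2.0 Pro

Focuses on long-horizon reasoning and stability on complex tasks

Suited to complex scenarios in real business workloads

Seed2.0 Lite

Balances generation quality and response speed

Suitable as a general-purpose production model

Seed2.0 Mini

Focuses on inference throughput and deployment density

Built for high-concurrency and batch generation scenarios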

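Model Performance

Seed2.0 shows marked gains in visual reasoning and perception, reaching SOTA on benchmarks such as MathVision.

For dynamic scenes, Seed2.0 strengthens its understanding of temporal sequences and motion perception, leading on key benchmarks such as MotionBench.

Seed2.0 also puts particular emphasis on instruction following and reaches the industry's top tier in evaluations of complex Agent capabilities.

Multimodal Visual Understanding and Interactive Applications

Seed2.0 can process complex visual inputs and carry out real-time interaction and application generation. Whether extracting structured information from images or generating interactive content from visual input, Seed2.0 completes the task efficiently and reliably.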

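Autonomously reproduces layout and visual details and generates runnable front-end code (with support for animation and interaction extensions).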

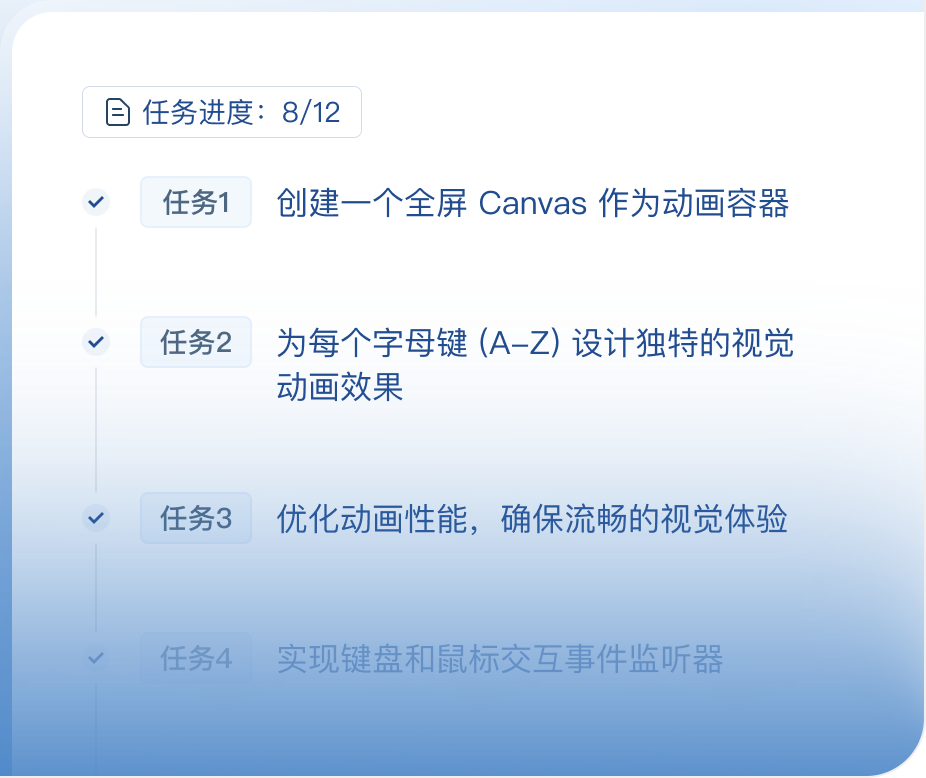

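Steady Progress on Complex Professional Tasks

Seed2.0 substantially strengthens LLM and Agent performance, remaining stable and reliable on long-horizon tasks with multi-step instructions.

Workflow gym - FreeCAD double-boss modeling and volume/surface-area readout task

Using the FreeCAD Part Design workbench, complete the full double-boss modeling workflow and extract the geometric parameters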

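Evaluation Results

Seed2.0 improves across the board over Seed1.8 on LLM, VLM, and Agent task evaluations, with particularly strong results in reasoning, complex instruction execution, and multimodal understanding.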

| Capability | Benchmark | Seed2.0 pro | Seed2.0 lite | Seed2.0 mini | GPT-5.2 High | GPT-5 mini High | Claude Sonnet 4.5 | Claude Opus 4.5 | Gemini 3 Pro High | Gemini 3 Flash High |
|---|---|---|---|---|---|---|---|---|---|---|
| Science | MMLU-Pro | 87 | 87.7 | 83.6 | 85.9 | 84.1 | 88 | 89.3 | 90.1 | 87.8 |
| | HLE (no tool, text only) | 32.4 | 28.2 | 13.3 | 29.9 | 17.6 | 14.5 | 23.7 | 33.3 | 31.7 |
| | SimpleQA Verified | 36 | 24 | 18.9 | 36.8 | 26 | 29.3 | 48.6 | 72.1 | 65.4 |
| | HealthBench | 57.7 | 51.2 | 30 | 39.4 | 62.5 | 28.7 | 36.3 | 37.9 | 51.6 |
| | HealthBench - Hard | 29.1 | 20 | 15.3 | 36.6 | 38.6 | 10.9 | 11 | 15 | 21.5 |
| | SuperGPQA | 68.7 | 67.5 | 61.6 | 67.9 | 60.5 | 65.5 | 70.6 | 73.8 | 72.7 |
| | LPFQA | 52.6 | 50.9 | 47.2 | 54.4 | 50.7 | 54.9 | 52.6 | 51.2 | 51.6 |
| | Encyclo-K | 65.7 | 64.5 | 52.1 | 61 | 53 | 58 | 63.3 | 64.9 | 60 |
| Math | AIME 2026 | 94.2 | 88.3 | 86.7 | 93.3 | 92.5 | 82.5 | 92.5 | 93.3 | 93.3 |
| | AIME 2025 | 98.3 | 93 | 87 | 99 | 90.3 | 87 | 91.3 | 95 | 95.2 |
| | HMMT Feb 2025 | 97.3 | 90 | 70 | 100 | 93.3 | 79.2 | 92.9 | 97.3 | 100 |
| | HMMT Nov 2025 | 93.3 | 86.7 | 80 | 100 | 96.7 | 81.7 | 93.3 | 93.3 | 96.7 |
| | MathArenaApex | 20.3 | 4.7 | 4.2 | 18.2 | 2.1 | 1 | 1.6 | 24.5 | 17.7 |
| | MathArenaApex (shortlist) | 82.1 | 52.6 | 31.1 | 80.1 | 43.4 | 26 | 47.4 | 71.4 | 71.9 |
| | BeyondAIME | 86.5 | 76 | 69 | 86 | 72 | 57 | 69 | 83 | 82 |
| | IMOAnswerBench (no tool) | 89.3 | 81.6 | 71.6 | 86.6 | 72.1 | 60.7 | 72.6 | 83.3 | 84.4 |
| Code | Codeforces | 3020 | 2233 | 1644 | 3148 | 1985 | 1485 | 1701 | 2726 | 2727 |
| | AetherCode | 60.6 | 41.5 | 29.8 | 73.8 | 42.6 | 16.4 | 31.6 | 57.8 | 56.1 |
| | LiveCodeBench (v6) | 87.8 | 81.7 | 64.1 | 87.7 | 62.6 | 64 | 84.8 | 90.7 | 84.7 |
| STEM | GPQA Diamond | 88.9 | 85.1 | 79 | 92.4 | 82.1 | 84.3 | 86.9 | 91.9 | 90.7 |
| | Superchem (text-only) | 51.6 | 48 | 16.2 | 58 | 34.8 | 32.4 | 43.2 | 63.2 | 54.4 |
| | BABE | 50 | 50.2 | 40.4 | 58.1 | 49.2 | 44.7 | 49.3 | 51.3 | 55.2 |
| | Phybench | 74 | 73 | 56 | 74 | 60 | 48 | 69 | 80 | 77 |
| | FrontierSci-research | 25 | 18.3 | 3.3 | 25 | 18.3 | 16.7 | 21.7 | 15 | 11.7 |
| | FrontierSci-olympiad | 74 | 70 | 44 | 75 | 69 | 60 | 71 | 73 | 73 |
| General Reasoning | ARC-AGI-1 | 85.4 | 75.7 | 43.3 | 89.9 | 54.5 | 70.9 | 84 | 85 | 86.9 |
| | ARC-AGI-2 | 37.5 | 14.8 | 2.3 | 57.5 | 3.5 | 13.6 | 29.1 | 31.1 | 34.3 |
| | KORBench | 77.5 | 77 | 72.8 | 79.2 | 74.2 | 73 | 77.4 | 73.9 | 76 |
| | ProcBench | 96.6 | 92.4 | 80.1 | 95 | 87.5 | 87.5 | 92.5 | 90 | 90 |
| Long Context Performance | MRCR v2 (8-needle) | 54 | 33.6 | 51.4 | 89.4 | 50.1 | 47.1 | 56.2 | 79.7 | 79 |
| | Graphwalks Bfs (<128k) | 68.9 | 82.5 | 64.1 | 98 | 85.5 | 80.5 | 92 | 79.9 | 84.2 |
| | Graphwalks Parents (<128k) | 97.6 | 100 | 93 | 99.7 | 96.6 | 99 | 96.2 | 99.7 | 99.7 |
| | LongBench v2 (128k) | 63.8 | 59.6 | 52.3 | 63.2 | 56.7 | 62 | 65 | 67.4 | 64 |
| | Frames | 84.5 | 83.4 | 80.5 | 84 | 82.9 | 78.7 | 84.7 | 81.9 | 83.7 |
| | DeR2 Bench | 58.2 | 57.3 | 46.6 | 69 | 50.3 | 58.9 | 60.4 | 66.1 | 66 |
| | CL-Bench | 20.8 | 20 | 14.8 | 23.9 | 25.2 | 18.1 | 22.6 | 15.6 | 16.1 |
| Multilingual | Global PIQA | 92.3 | 92.1 | 89.2 | 93.2 | 91.6 | 93.9 | 93.9 | 95 | 95.6 |
| | MMMLU | 88.1 | 87.7 | 81.6 | 90.3 | 86.3 | 89.9 | 91 | 91.8 | 91.8 |
| | Disco-X | 82 | 80.3 | 73 | 76.3 | 67.7 | 70.3 | 78.6 | 76.8 | 71.9 |
| Instruction Following | MultiChallenge | 68.3 | 63.2 | 61.1 | 59.5 | 59 | 57.3 | 59 | 68.7 | 69.3 |
| | COLLIE | 93.9 | 94 | 91.2 | 96.9 | 97.4 | 77.3 | 79.8 | 95 | 96.5 |
| | MARS-Bench | 85.6 | 80.5 | 62.4 | 87.9 | 66.1 | 72.9 | 87.7 | 85.6 | 84.6 |
| | Inverse IFEval | 78.9 | 77.1 | 69.3 | 72.3 | 74.8 | 69.3 | 72.4 | 79.6 | 80.9 |
| Hallucination | LongFact-Objects | 92.9 | 92.2 | 87 | 99.2 | 99.2 | 98.8 | 99 | 98.1 | 97.9 |
| | LongFact-Concepts | 92.8 | 92.4 | 91.4 | 99.7 | 99.5 | 98.5 | 98.8 | 98.5 | 98.6 |
| | FactScore | 71.2 | 62.4 | 50.4 | 91.9 | 96.1 | 90.6 | 91.1 | 92.6 | 92 |

| Capability | Benchmark | Seed2.0 pro | Seed2.0 lite | Seed2.0 mini | GPT-5.2 High | Seed1.8 | Claude Opus 4.5 | Gemini 3 Pro High |
|---|---|---|---|---|---|---|---|---|
| Multimodal Math | MathVista | 89.8 | 89 | 85.5 | 83.1 | 87.7 | 80.6 | 89.8 |
| | MathVision | 88.8 | 86.4 | 78.1 | 86.8 | 81.3 | 74.3 | 86.1 |
| | DynaMath | 68.9 | 70.5 | 58.9 | 70.1 | 61.5 | 52.5 | 63.3 |
| | MathKangaroo | 90.5 | 86.3 | 79.8 | 86.9 | 73.8 | 69.6 | 84.4* |
| | MathCanvas | 61.9 | 61.1 | 53.2 | 55.3 | 53.6 | 52.9 | 58.8 |
| Multimodal STEM | MMMU | 85.4 | 83.7 | 79.7 | 83.7 | 83.4 | 81.6 | 87 |
| | MMMU-Pro | 78.2 | 76 | 71.4 | 79.5* | 73.2 | 70.8 | 81.0* |
| | EMMA | 72 | 65.5 | 57 | 69.4 | 60.9 | 60.4 | 66.5 |
| | SFE | 55.6 | 53.4 | 48.4 | 50.1 | 51.2 | 55.8 | 61.9 |
| | HiPhO | 74.1 | 72.5 | 55.8 | 77.7 | 58.3 | 81.8 | 79.1 |
| | XLRS-Bench (macro) | 54.6 | 53.7 | 49.9 | 49.9 | 39.9 | 50.4 | 51.7 |
| | PhyX (openended) | 72.1 | 62.8 | 65 | 71.5 | 65.9 | 61.3 | 71 |
| Visual Puzzles | LogicVista | 81.9 | 79.6 | 73.8 | 81 | 78.3 | 68.9 | 80.8 |
| | VPCT | 76 | 73 | 48 | 56 | 61 | 29 | 90 |
| | ZeroBench (main) | 12 | 8 | 7 | 11 | 11 | 4 | 10 |
| | ZeroBench (sub) | 47.6 | 42.2 | 36.2 | 38.9 | 37.7 | 30.8 | 42.2 |
| | ArcAGI1-Image | 88.8 | 80.9 | 29.8 | 93.1 | 31.4 | 75.8 | 69.4 |
| | ArcAGI2-Image | 43.3 | 28.3 | 1.5 | 54.4 | 1.3 | 26.1 | 21.5 |
| | VisuLogic | 47.4 | 47.3 | 40.4 | 37 | 35.8 | 27.6 | 39 |
| Perception & Recognition | VLMsAreBiased | 77.4 | 74.8 | 58.4 | 28 | 62 | 21.4 | 50.6* |
| | VLMsAreBlind | 98.6 | 97 | 93.1 | 84.2 | 93 | 77.2 | 97.5 |
| | VisFactor | 36.8 | 33.4 | 23.6 | 33.6 | 20.4 | 24.5 | 45.8 |
| | RealWorldQA | 86 | 81.7 | 81.6 | 82.1 | 78 | 75.9 | 84.7 |
| | BabyVision | 60.6 | 57.5 | 38.7 | 37.4 | 30.2 | 16.2 | 49.7* |
| General VQA | SimpleVQA | 71.4 | 67.2 | 68.7 | 54.1 | 65.4 | 57.9 | 69.7 |
| | HallusionBench | 68 | 66 | 65.1 | 67.7 | 63.9 | 65.3 | 69.9 |
| | MME-CC | 57 | 50.2 | 40.8 | 44.4 | 43.4 | 25.2 | 56.9 |
| | MMStar | 83 | 80.7 | 79.1 | 78.2 | 79.9 | 73.9 | 83.1 |
| | MUIRBench | 81.8 | 76.2 | 78 | 77.4 | 78.7 | 78.9 | 78.2 |
| | MTVQA | 51.1 | 51.1 | 50.6 | 48.5 | 47.3 | 53.1 | 50.8 |
| | WorldVQA | 49.9 | 44 | 47.6 | 26.3 | 40.4 | 36.6 | 47.5 |
| | VibeEval | 81.4 | 76.5 | 76.5 | 73.1 | 74 | 70.3 | 77.7 |
| | ViVerBench | 75.9 | 80 | 73.9 | 74.8 | 74.6 | 72.4 | 75.9 |
| Pointing & Counting | CountBench | 95.5 | 97.1 | 95.5 | 91.2 | 96.3 | 90.3 | 97.3 |
| | FSC-147↓ | 11.3 | 11.9 | 17.3 | 21.1 | 13.6 | 20.9 | 12.1 |
| | Point-Bench | 81.4 | 79 | 77 | - | 76.5 | - | 85.5* |
| 2D & 3D Spatial Understanding | BLINK | 79.5 | 75.6 | 73.4 | 70.3 | 74.3 | 68.1 | 77.1 |
| | MMSIBench (circular) | 32.5 | 28.3 | 19.7 | 26.1 | 25.8 | 20.2 | 25.4 |
| | TreeBench | 64.7 | 64.2 | 57.3 | 58.8 | 58.5 | 53.6 | 62.7 |
| | RefSpatialBench | 72.6 | 66.4 | 55.6 | 25.5 | 56.3 | - | 65.5* |
| | DA-2K | 92.3 | 90.3 | 86.4 | 78.9 | 90.7 | 70.3 | 82.1 |
| | All-Angles | 72.1 | 65.2 | 61.3 | 71.5 | 61.6 | 63.1 | 73.5 |
| | ERQA | 68.5 | 65.8 | 56.3 | 59.8 | 58.8 | 48.3 | 70.5* |
| Document & Chart Understanding | ChartQAPro | 71.2 | 70.3 | 65.2 | 67.6 | 63 | - | 69 |
| | OCRBenchv2 | 62.5 | 62.4 | 58.5 | 55.6 | 52.6 | 55.5 | 63.3 |
| | OmniDocBench 1.5 ↓ | 0.099 | 0.102 | 0.11 | 0.143* | 0.106 | 0.153 | 0.115* |
| | CharXiv-DQ | 93.5 | 93.3 | 91.9 | 93.8 | 88 | 92.7 | 94.4 |
| | CharXiv-RQ | 80.5 | 79.9 | 70.8 | 82.1* | 71.4 | 65.5 | 81.4* |
| Multimodal LongContext Understanding | DUDE | 72.4 | 72.1 | 68.9 | 68.2 | 69.4 | 55.6 | 70.1 |
| | MMLongBench | 74.8 | 70.8 | 66.7 | - | 72.4 | - | 73.6 |
| | LongDocURL | 74.7 | 75.1 | 71.3 | - | 74.5 | - | 72 |
| | MMLongBench-Doc | 61.4 | 55.1 | 48.9 | - | 57 | - | 59.5 |

| Capability | Benchmark | Seed2.0 pro | Seed2.0 lite | Seed2.0 mini | Seed1.8 | Gemini 3 Pro High | Gemini 3 Flash High | Human |
|---|---|---|---|---|---|---|---|---|
| Video Knowledge | VideoMMMU | 86.9 | 84.1 | 80.6 | 82.7 | 87.6* | 88.1 | 74.4 |
| | MMVU | 78.2 | 75 | 69 | 73.1 | 76.3 | 77.9 | 49.7 |
| | VideoSimpleQA | 71.9 | 66.6 | 67.7 | 67.8 | 71.9 | 70.7 | - |
| Video Reasoning | VideoReasonBench | 77.8 | 64.2 | 40.5 | 52.8 | 59.5 | 61.2 | 73.8 |
| | Morse-500 | 37.4 | 32.2 | 32.2 | 29.2 | 33 | 32.4 | 55.4 |
| | VideoHolmes‡ | 67.4 | 63.8 | 58.6 | 65.5 | 64.2 | 65.6 | - |
| | Minerva‡ | 66.5 | 63.8 | 54.7 | 62.4 | 65 | 64.4 | - |
| Motion & Perception | TVBench | 75 | 71.5 | 70.5 | 71.5 | 71.1 | 69.6 | 94.8 |
| | ContPhy | 67.4 | 56.1 | 55.9 | 54.9 | 58 | 60.5 | - |
| | TempCompass | 89.6 | 87 | 83.7 | 86.9 | 88 | 88.3 | 97.3 |
| | EgoTempo | 71.8 | 61.8 | 67.2 | 67 | 65.4 | 58.4 | 63.2 |
| | MotionBench | 75.2 | 70.9 | 64.4 | 70.6 | 70.3* | 68.9 | - |
| | TOMATO | 59.9 | 57.3 | 47.4 | 60.8 | 55.8 | 60.8 | 95.2 |
| | + Thinking with Tracking | 65.3 | 59.2 | 51.3 | 61 | - | 64 | 95.2 |
| Long Video | VideoMME‡ | 89.5 | 87.7 | 81.2 | 87.8 | 88.4* | 85.2 | - |
| | CGBench | 65 | 59.3 | 59.2 | 62.4 | 65.5 | 65.3 | - |
| | LongVideoBench | 80.3 | 77.3 | 74.8 | 77.4 | 76.7 | 74.5 | - |
| | VideoEval-Pro | 48 | 44.3 | 43.7 | 45.9 | 52.7 | 51.9 | - |
| | LVBench | 76.4 | 73 | 66.6 | 73 | - | - | - |
| Multi Video | CrossVid | 60.3 | 57.7 | 58.6 | 57.3 | 53 | 48.7 | 89.2 |
| Streaming Video | OVBench | 69.2 | 65.5 | 60.1 | 65.1 | 62.7 | 59.2 | - |
| | LiveSports-3K | 78 | 77.8 | 73.3 | 77.5 | 74.5 | 71.5 | - |
| | OVOBench | 77 | 76.7 | 70.4 | 72.6 | 70.1 | 68.7 | 92.8 |
| | ODVBench | 72.5 | 69.6 | 65.1 | 63.5 | 63.6 | 56.7 | 91.4 |
| | ViSpeak | 78.5 | 84 | 77.5 | 79 | 89 | 86 | 96 |

| Capability | Benchmark | Seed2.0 pro | Seed2.0 lite | GPT-5 mini High | GPT-5.2 High | Claude Sonnet 4.5 | Claude Opus 4.5 | Gemini 3 Pro High | Gemini 3 Flash High |
|---|---|---|---|---|---|---|---|---|---|
| Coding Agent | Terminal Bench 2.0¹ | 55.8 | 45 | 36.9 | 62.4 | 45.2 | 60.2 | 56.9 | 60 |
| | SWE-Lancer | 49.4 | 47.1 | 43.1 | 48.9 | 45.7 | 56.1 | 44.3 | 51.7 |
| | SWE Bench Verified | 76.5 | 73.5 | 67.9 | 80 | 77.2 | 80.9 | 76.2 | 78 |
| | Multi-SWE-Bench | 45.2 | 41.1 | 49.3 | 47.7 | 47.7 | 52.8 | 50.2 | 59 |
| | SWE-Bench Pro | 46.9 | 46 | 51.7 | 55.6 | 48.4 | 55.4 | 49.7 | 46.7 |
| | SWE Multilingual | 71.7 | 64.4 | 63.8 | 68.8 | 64.1 | 74 | 72.7 | 71.1 |
| | Scicode | 48.5 | 52.4 | 40.2 | 49.7 | 47.9 | 52.8 | 57.7 | 55 |
| | SWE-Evo | 8.5 | 10.6 | 10.4 | 12.5 | 16.7 | 27.1 | 8.9 | 12.8 |
| | Aider Polyglot | 80 | 76 | 79.1 | 91.1 | 82.2 | 92.4 | 94.2 | 92 |
| | ArtifactsBench | 66.6 | 62.6 | 68.9 | 71.1 | 59.1 | 68.5 | 58.4 | 52.5 |
| | CodeSimpleQA | 58 | 53.1 | 57.6 | 62.3 | 59.6 | 63 | 54.7 | 53.7 |
| | SpreadsheetBench Verified | 79.1 | 82.3 | 58.1 | 69.9 | 75.9 | 78.6 | 70.8 | 65.7 |
| Search Agent | BrowseComp | 77.3 | 72.1 | 48.1 | 77.9(65.3) | 43.9(29.5) | 67.8(57.2) | 59.2 | 41.5 |
| | BrowseComp-zh | 82.4 | 82 | 49.5 | 76.1 | 42.4 | 62.4 | 66.8 | 63 |
| | HLE-text | 54.2 | 49.5 | 35.8 | 45.5 | 32 | 43.2 | 46.9 | 47.6 |
| | HLE-Verified | 73.6 | 70.7 | 56.4 | 68.5 | 37.6 | 56.6 | 67.5 | 71.8 |
| | WideSearch | 74.7 | 74.5 | 37.7 | 76.8 | 65.1 | 76.2(71.7) | 67.3 | 64 |
| | FinSearchComp | 70.2 | 65.1 | 38.1 | 73.8 | 58.6 | 66.2 | 52.7 | 54.8 |
| | DeepSearchQA | 77.4 | 67.7 | 16.7 | 71.3(66.4) | 36.3 | (76.1)41.6 | 63.9 | 54.7 |
| | Seal-0 | 49.5 | 52.3 | 34.2 | 51.4 | 53.4 | 47.7 | 45.5 | 37.8 |
| Tool Use | τ2-Bench (retail) | 90.4 | 90.9 | - | 82 | 86.2 | 88.9 | 85.3 | 88.6 |
| | τ2-Bench (telecom) | 94.2 | 92.1 | - | 98.7 | 98 | 98.2 | 98 | 94.7 |
| | MCP-Mark | 54.7 | 46.7 | 30.2 | 57.5 | 32.1 | 42.3 | 53.9 | 40.5 |
| | BFCL-v4 | 73.4 | 72.9 | 57.9 | 65.9 | 72.9 | 76.5 | 71 | 65 |
| | VitaBench | 47 | 41.8 | 20.8 | 41.8 | 40.8 | 55.3 | 48.8 | 46.7 |
| Deep Research | DeepConsult | 61.1 | 60.3 | 49.8 | 54.3 | 55.8 | 61 | 48 | 26 |
| | DeepResearchBench | 53.3 | 54.4 | 50.7 | 52.2 | 47.2 | 50.6 | 49.6 | 46.1 |
| | ResearchRubrics | 50.7 | 50.8 | 43.6 | 42.3 | 38.6 | 45 | 37.7 | 36.9 |
| Vision Agent | Minedojo Verified | 49 | 39.7 | 20.3 | 18.3 | - | - | 23.3 | 24.3 |
| | MM-BrowseComp | 48.8 | 45.1 | 17.9 | 26.3 | - | - | 25 | 22.8 |
| | HLE-VL | 39.2 | 35.8 | 18.7 | 31 | - | - | 36 | 36.8 |
| Science Discovery | Scicode | 52.1 | 52.4 | 40.2 | 49.7 | 47.9 | 52.8 | 57.7 | 55 |
| | FrontierSci-research | 23.3 | 18.3 | 18.3 | 25 | 16.7 | 21.7 | 15 | 11.7 |
| | Superchem (text-only) | 53 | 48 | 34.8 | 58 | 32.4 | 43.2 | 63.2 | 54.4 |
| | BIObench | 53.5 | 50.2 | 49.2 | 58.1 | 44.7 | 49.3 | 51.3 | 55.2 |
| | AInstein Bench | 47.7 | 38.3 | 35 | 41.3 | 33.7 | 44 | 42.8 | 44 |
| Vibe Coding | NL2Repo-Bench | 27.9 | 24.6 | 19.5 | 49.3 | 39.9 | 43.2 | 34.2 | 27.6 |
| | NL2Repo (Pass@1) | 3 | 1 | 2 | 8 | 3 | 3 | 4 | 2 |
| | ArtifactsBench | 66.6 | 62.6 | 68.9 | 71.1 | 59.1 | 68.5 | 58.4 | 52.5 |
| | SWE-Bench Pro | 46.9 | 46 | 51.7 | 55.6 | 48.4 | 55.4 | 49.7 | 46.7 |
| | Terminal Bench 2.0¹ | 55.8 | 45 | 36.9 | 62.4 | 45.2 | 60.2 | 54.2 | 60 |
| Context Learning | KORBench | 77.2 | 77 | 74.2 | 79.2 | 73 | 77.4 | 73.9 | 76 |
| | DeR2 Bench | 58.2 | 57.3 | 50.3 | 69 | 58.9 | 60.4 | 66.1 | 66 |
| | CL-Bench | 21.5 | 20 | 25.2 | 23.9 | 18.1 | 22.6 | 15.6 | 16.1 |
| | ToB-Complex Workflows | 64.7 | 68.2 | 53.4 | 45 | 61 | 64.8 | 69.2 | 64.9 |
| | ToB-Reference Q&A | 72.4 | 62 | 42.5 | 63.6 | 58.9 | 67.9 | 68.3 | 61.3 |
| Real World Tasks | HealthBench - Hard | 28.3 | 20 | 38.6 | 36.6 | 10.9 | 11 | 15 | 21.5 |
| | GDPVal-Diamond | 21.3 | 23.2 | 11.5 | 26.9 | 15.2 | 20.7 | 19.4 | 6.46 |
| | XPert Bench | 64.5 | 63.3 | 47.6 | 53.3 | 44.7 | 50.5 | 53.1 | 50.1 |
| | ToB-K12 Education | 62.8 | 63.8 | 53.7 | 61.6 | 50.1 | 56.2 | 59.4 | 59 |
| | ToB-Compositional Tasks | 59.1 | 54.8 | 47.5 | 51.5 | 57.3 | 63.6 | 64.8 | 57.7 |
| | ToB-Text Classification | 69 | 64.5 | 52.7 | 62.1 | 64.5 | 63.9 | 67.5 | 61.9 |
| | ToB-Information Extraction | 52 | 48.4 | 40.5 | 44.7 | 48.3 | 50.1 | 49 | 45.4 |
| | World Travel (VLM) | 12 | 14 | 2.7 | 19.33 | 2.67 | 14 | 8 | 7.3 |
| | World Travel (TEXT) | 23.3 | 24 | 15.3 | 32.67 | 10 | 21.3 | 14.7 | 13.3 |

Results marked with * are taken from technical reports.

Benchmarks marked with ‡ were evaluated with subtitles included.

1: Evaluated using Terminus2.
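
When comparing rows from the tables above programmatically, the cell markers need handling: `*` flags a figure taken from a technical report, `-` means no result, and a `↓` after a benchmark name is read here as "lower is better" (an assumption; the page does not spell this out). The following is a minimal Python sketch under those assumptions. The helper names `parse_score` and `best_model` are hypothetical, and cells with parenthesized alternates such as `77.9(65.3)` are deliberately not handled.

```python
from typing import Optional


def parse_score(cell: str) -> Optional[float]:
    """Convert a table cell such as '84.4*' or '-' to a float, or None if missing."""
    text = cell.strip().rstrip("*")
    if text in ("", "-"):
        return None
    return float(text)


def best_model(benchmark: str, row: dict[str, str]) -> str:
    """Return the best-scoring model; a trailing '↓' on the name is assumed
    to mean that lower scores are better."""
    lower_is_better = benchmark.rstrip().endswith("↓")
    scores = {}
    for model, cell in row.items():
        value = parse_score(cell)
        if value is not None:  # skip models without a reported result
            scores[model] = value
    pick = min if lower_is_better else max
    return pick(scores, key=scores.get)


# Two rows copied from the image-understanding table (Pointing & Counting).
fsc147 = {
    "Seed2.0 pro": "11.3", "Seed2.0 lite": "11.9", "Seed2.0 mini": "17.3",
    "GPT-5.2 High": "21.1", "Seed1.8": "13.6",
    "Claude Opus 4.5": "20.9", "Gemini 3 Pro High": "12.1",
}
point_bench = {
    "Seed2.0 pro": "81.4", "Seed2.0 lite": "79", "Seed2.0 mini": "77",
    "GPT-5.2 High": "-", "Seed1.8": "76.5",
    "Claude Opus 4.5": "-", "Gemini 3 Pro High": "85.5*",
}

print(best_model("FSC-147↓", fsc147))         # Seed2.0 pro
print(best_model("Point-Bench", point_bench))  # Gemini 3 Pro High
```

Keeping the raw cell strings and parsing them at comparison time preserves the `*` provenance marker in the data, which matters because starred figures come from a different source than the rest of the row.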
